(Over-) Complexity in Conspiracy

Regarding the vexing problem of when to dismiss possibilities as over-burdened by complexity.

This post:

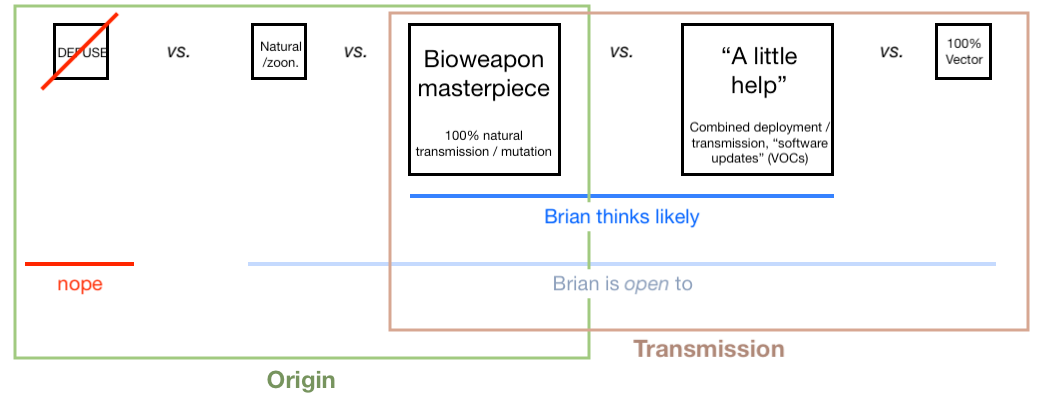

Clarifies my stance on 100% transmission vs. mixed vs. the zero-transmission model. (I lean between the first two.)

Offers a rumination on the similarities between 9-11 and SARS-CoV-2 and the difficulty in using complexity as a rubric for discerning the truth.

I promise readers that Unglossed will return to grounded biology and study-review drudgery mode this week. I’ll even bring back the summary cards!

Not promise-promise, mind you, but “acknowledge a content focus problem”-promise.

Regardless, I would like to devote one more post (for now) to clarifying my frustratingly unclear stance on repeat release, as well as to dive into some third rail 9-11 commentary.

Why should those two subjects be related? I have come to believe that a simple case can be made from 9-11 that one cannot make any valid decision matrix for dismissing or entertaining conspiracy theories in the modern world. And by coincidence, I was featured in the after-talk of Kevin McCairn’s astounding interview of Richard Gage regarding the evidence for controlled demolition of WTCs 1, 2, and 7.

So, readers, either don those tinfoil hats or break out the anti-tin-foil-hat-wearer-spray, and proceed or turn back accordingly!

(If there’s a more efficient recipe for intellectual isolation than publishing biowarfare conspiracy-theorizing and statistical fact-checking posts in the same week, I’ll be sure to take it up once I think of it.)

Clarifying my (possibly to-be-revised) stance on repeat release vs. “bioweapon masterpiece.”

The other day, my recent forays into the “infectious clone controversy” were characterized by Charles Rixey in his chat with Kevin McCairn on the subject. This chat begins at 3 hours and 25 minutes of McCairn’s Richard Gage stream.

Generally, I find it difficult to consume information in video format; but McCairn’s streams are always illuminating, especially for dispelling the comically flawed evidence surrounding graphene oxide, nano-assembly, and other non-mRNA red herrings that have either been planted by “controlled opposition” or have endogenously emerged to siphon attention and money from the Covid vaccine skeptical ecosystem. I intend to highlight some of his videos on that subject later. With that said, viewers might find McCairn’s taboo-obliterating persona somewhat off-putting; consider yourself warned (headphones are recommended if watching in mixed company).

Rixey, I believe, mischaracterizes my stance on repeat release, but this is entirely fair. My stance is essentially hyper-nuanced and (I am sure) frustrating. Additionally, while I am not overly-familiar with the work of PANDA in general, I am sure my recent “endorsement” of Nick Hudson’s post and my longstanding hostility to the “gain of function” meta-narrative serve to cast my beliefs as more extreme than they are. Lastly, I cannot guarantee that I will navigate the task of trying not to be outright dismissive of vector release and pandering to a fad successfully.1

Thus, to clarify, here is the spectrum of what I lean toward, and what I am open to, on the subjects of the origin and “performance” of SARS-CoV-2 as the agent of widely observed disease since 2019. This is essentially my position as of October:

Unbelievably not that radical?

In the category of “likely” is 100% authentic (post-release) transmission and mutation; or, some mix of the same and lab-updates and global deployment, etc. Note that the second category could accommodate what is pretty widely suspected, i.e. that the Omicrons must have a lab origin. In fact, despite authoring the best argument for the same (imo), I have become more open to the Omicrons being an accident of natural mutation in light of my research on the other VOCs.

Anyway, back to the frustratingly nuanced parts — as shown, I am “open” to vector release as a means of transmission, but it is not something that strikes me as likely. In case this seems coy or elusive, note that I have ranked it with the outlandish notion of a natural, zoonotic origin for SARS-CoV-2. That’s right, I am still willing to at least entertain the possibility that the HIV inserts that were highlighted implausibly quickly by researchers from India (suggesting that those observations were simply laundered intelligence community broadcasts), as well as the furin cleavage site, are in fact miraculous natural accidents.2

Nature often rhymes. It could be the case that the reason SARS-CoV-2 shares epitopes with HIV is the same reason bats and birds both have wings. So, I am simply not ruling anything out. I don’t think either natural origin or zero-transmission is likely. To be 100% clear, zero transmission requires full-throttle, worldwide sequence falsification, which I am unwilling to claim as plausible. I strongly doubt it.

Shouldn’t I just denounce zero-transmission outright? After all, it could be the case that zero-transmission, a.k.a. “Infectious Clones™,”3 is, like not-a-virus-ism in general, another controlled opposition red herring; in which case, aren’t we better off “purging” the eco-system of this intellectual poison? Well, it’s not actually clear to me whether there is an upside to such intellectual purges. Thus, I choose not to expend energy ruling out every potential-op under the sun, especially as doing so could blind me to future evidence that changes how likely things seem to be.

But that could change

In not taking a hostile stance toward the zero-transmission model, I may have missed some developing lines of evidence that render dismissal safe. One of these is mentioned by McCairn himself — the abrupt explosion of cases in his own Japan.

Even exceeding these explosions, of course, was the recent supernova in China. As just described in my cross-posting, “Austrian China” mentions how much first-hand evidence the recent outbreak has added in favor of unambiguous human-to-human transmission (supplementing what I would say was already conclusive evidence bar declaration of worldwide sequence falsification):

(To be additionally clear; my own first-hand experience with this world-sweeping disease was with stupendously obvious transmission from a sick person.)

So maybe my October belief matrix is in need of an update, in light of China.

Half and half

With all that throat-clearing out of the way, I can (hopefully) clearly and unequivocally state that I am half-and-half between a belief that SARS-CoV-2 is a “bioweapon home-run” (100% natural transmission and mutation) and a belief that there has been a mix of mostly transmission and periodic amplification or “infusion” of new, lab-designed variants. After all, wouldn’t it make sense to have a system for the latter in place, since the former couldn’t be known to be true in advance? If you can keep the virus “backed up” in a DNA<>virus platform, why wouldn’t you take advantage of it?

Here I would like to show, once again, my animated gif based on Trevor Bedford and co.’s mathematical scoring of various Nextstrain clades for fitness over time:

And, I stand by my endorsement of Hudson’s post as an apt phrasing of why we should be at least skeptical of a bioweapons home run model; but as I noted at the time, I do not go as far as him in dismissing the evidence for the same.4 It also might be the case, as Rixey notes in the McCairn stream, that Hudson is flirting with outright not-a-virus-ism. I read his arguments as essentially noncommittal on the subject.

As a final point, lest it seem like I am trying to “Have my Infectious Clones™ cake and eat it too,” note that one cannot even use this type of genetic evidence to try to prove the theory of repeat release under the Infectious Clones™ paradigm, as it necessarily asserts that all of the sequencing is bunk.

How much complexity is too much?

And so now we turn to 9-11, and how 9-11 renders difficult any attempt to use complexity as a rubric for ruling out possibilities. This is an ironic problem, given that Rixey’s (entirely valid) critique of denying the bioweapons home run model is that to do so “adds complexity” — and yet in the earlier part of the stream, Richard Gage had demonstrated just how vast the “budget” for complexity on these types of problems really is.

I get the theoretical importance and utility of a “don’t add unneeded complexity” rubric. But then what is one to do about all the unnecessary complexity burdening both the official narrative and denial of the official narrative surrounding 9-11?

Describing the “9-11 Problem”

If the reader is only interested in 9-11 in and of itself, go see McCairn’s standalone version of the Richard Gage interview, which starts at 12 minutes. It is a fantastic summary of the Architects and Engineers for 9-11 Truth case (even though Gage has been ousted). The only disappointment is that Gage runs out of time to offer his thoughts on how 9-11 provides insights for our so-called Pandemic™.

My focus here is on the meta — how does 9-11 affect the reliability of using complexity as a rubric for deciding between official narrative and wild conspiracy theories?

The significance of 9-11 for this purpose might not have even occurred to people who are open to the “inside job” theory. This essentially describes me from the first news reports of “box-cutters” to about a year ago: I knew something was implausible about the official narrative, but still had no idea how much complexity was on the “inside job” side. There was a third building that didn’t even get hit by any airplanes! I literally didn’t know this!

Learning about the ridiculous amount of complexity “inside job” would require has thus calibrated my thinking about SARS-CoV-2, given the obvious similarities in broader context.

So let’s suppose, for example, you are an expert in architecture like Gage. And after a few years of accepting the official narrative (airplanes collapsed WTC 1 and 2 directly, and 7 indirectly), you become aware that there are one or two tiny problems with it. In endeavoring to decide what really happened, you could approach the problem as an evidence scale:

Categorizing all the evidence as “complexity” itself simplifies things; for example, if the motion of the building does not match a structural failure collapse, then you have “added complexity” to the teddy bear — because now you need to explain why your structural collapse doesn’t produce the expected motion. Thus, whichever explanation introduces more complexity according to the Science of Building Falling and the evidence sinks; and the other rises.

The secondary result of this scale would be that you have created a device for measuring how much complexity in conspiracy on the part of the US Government is possible.

Of course, lay people are not experts, and cannot operate such a device. A lay device would essentially look like the following:

Lay 9-11 Conspiracy Complexity Measurement Device (CCMD)

Expert consensus will determine how the buildings must have fallen.

If expert consensus determines “inside job,” then observed complexity of “inside job” is clearly possible.

But what is notable about the “expert device” regarding the collapses is that it resembles other facets of 9-11 that have been accessible to common lay reasoning from the start. And these all present the same problem: there’s too much complexity on both sides.

Why is 9-11 more important here than other “wild conspiracy theories”? Well, most of the other “big ones” aren’t as elaborate as they seem. Feel however you want about the moon landings, for example; but when you look into the number of individuals required to enlist and keep silent, it is rather modest.

The amount of possible complexity that a 9-11 “inside job” would involve is on the other hand staggering. So much so that it should seem to easily outweigh anything you could put on the other end of the scale.

(However, it seems safe to assume that 9-11 will never be announced an “inside job” by the news.)

Aside: The zero complexity default

But what is notable about this proposed rubric is that most people even to this day would not agree to employ the “lay device.” They do not admit conspiracy is even possible; therefore, it wouldn’t matter if the news came out and said all three buildings were seemingly blown up (just as it did say on the day in question, but not afterward). It thus likewise does not matter if two coalitions of experts, in the airline industry and in architecture and engineering, have come to a consensus. Most people do not accept the 9-11 Conspiracy Complexity Measurement Device.

To most, conspiracies simply can’t be real, because “someone would talk.” Simply to engage in a conspiracy at all has already added too much complexity.

Of course, this argument is simply a convulsion of pure imagination; as if humans come packaged with actual rule-books that we can simply agree to look up to determine possible behaviors (which is, actually, how many “smart” people tend to think, including for biological properties, laws of nature, etc. — whatever our description of a thing is is the thing, whatever rules our description of reality implies are the rules of reality).

But besides the fact that no human alive actually knows what behaviors humans “can’t” do in concert (or at the behest of spiritual forces that “smart” people assume to have been declared impossible in the human rule-book), the fairy-tale that conspiracies are not real and common is a modern anachronism (does it even predate Kennedy’s assassination, I wonder?). Moreover, it was essentially assumed from the start that the Federal Government would subject the states to threats of the same. As Madison wrote:

I am unable to conceive that the people of America in their present temper, or under any circumstances which can speedily happen, will chuse, and every second year repeat the choice of sixty five or an hundred men, who would be disposed to form and pursue a scheme of tyranny or treachery. I am unable to conceive that the state legislatures which must feel so many motives to watch, and which possess so many means of counteracting the federal legislature, would fail either to detect or to defeat a conspiracy of the latter against the liberties of their common constituents. I am equally unable to conceive that there are at this time, or can be in any short time, in the United States any sixty five or an hundred men capable of recommending themselves to the choice of the people at large, who would either desire or dare within the short space of two years, to betray the solemn trust committed to them.5

Madison is not opining that conspiracy in the US Federal government can’t happen, nor even that it likely won’t. He is emphasizing that it could be resisted — emphasis which implies a belief that such conspiracy will happen. What is the means for this resistance? Recognition, and removal — as legislators are essentially emissaries of the individual states, not of the people per se. But both recognition and removal are easily neutered by the transfer of true law-making authority from the legislature to the executive branch, as was achieved in fits starting with Lincoln and finally was cemented after WWII.

We can recklessly assume based on this single tossed-off Federalist Paper quote that Madison’s default assumption of our modern reality would be that the US Government is engaged in conspiracy at all times; and we’ve simply blinded ourselves to the signs.

Result of the “9-11 Problem”

Obviously, expert consensus has not declared 9-11 an “inside job.” After all, did Madison describe a way to prevent the Federal Government from controlling and censoring expert opinion? No? Hm. Too bad for us.

Lay 9-11 Conspiracy Complexity Measurement Device (CCMD)

Expert consensus will determine how the buildings must have fallen.

If expert consensus determines “inside job,” then observed complexity of “inside job” is clearly possible.

oops

(What would James do, given this problem?)

But the “elevator pitch” version of Gage’s result is that the Twin Towers must have been disintegrated by bleeding-edge demolition techniques (including a custom thermite propellant, or “super-thermite”) because no other method of bringing these buildings down was even possible (and the chemical evidence is supportive), while WTC 7 was felled by traditional controlled demolition.

Which implies a budget for conspiracy complexity that is practically infinite. Perhaps, in that light, we should be glad that the Government also controls expert consensus.

Parallels with SARS-CoV-2

Again, I am not here to convince readers in any particular way regarding 9-11, since I have no authority to offer on the subject. I merely seek to invite comparison between 9-11 and SARS-CoV-2 as two subjects in which complexity burden serves poorly for navigating reality.

Rixey mentions the strongest argument in favor of “bioweapon home run,” which is that it simplifies. In other words, if your goal was to make a lot of people sick, you would put more effort into developing a competent virus than into simulating one. The former would yield greater return on investment.

I strongly agree, and have made exactly the same argument for why I lean toward the virus being the agent of disease.6

I am open to theories that the virus — the thing lighting up PCRs and NGS with the same-ish genes over and over — was in this case not the invisible “something” actually causing symptoms. That there were shadowy, deep-state ops poisoning people by other means. But I am not convinced by them: Attaching a human-compatible spike receptor binding domain and furin cleavage site to a coronavirus backbone is a good way to create the results observed.

And as mentioned above, the Omicron wave in post-zero-Covid China may be reason for me to revisit and finally reject my non-dismissal of zero-transmission (the above quote being from early October).

But is making a functional pandemic virus (not just making something that looks impressive in a lab animal model) actually easy? Or does it just add more complexity on the other side of the scale?

Humans still can’t even make proteins. (When we need a DNA polymerase that can withstand the high temperatures of PCR assays, we have to steal them from bacteria that evolved to withstand heat.) Viruses are more complex than a single protein.

I get that the humanization of a sarbecovirus receptor binding domain works on paper; but viruses depend for their success in host niches on more than just binding to a receptor. I also get that it seems like certain individuals have been working on coronaviruses for decades; but it’s not clear whether this is an argument in favor of their having been successful, or deciding simply to rig the “tower” of Earth with an unthinkably complex, seemingly impossible delivery system.

Overall, I think we may still be in the early era of assembling the full picture of complexity on both sides of the question. We are like Gage pulling over in his car in 2005. There remains much more to be determined.


A fad which, to be clear, post-dates my non-dismissal of vector release (see footnote 6).

These features are further detailed and cited in my annotated spike protein map.

As stated in my previous post, I chose to avoid the term “infectious clones” in my first review of the synthetic origins paper that kicked off this controversy, on October 22, i.e. before Infectious Clones™ became a short-hand for universal vector release.

This choice was coincidentally because I thought the term has misleading connotations from go, not because I now seek to obscure the significance of the (misleading) term.

I used “DNA<>virus platform” specifically because that describes what the system is, and makes clear that authentic, “real viruses” are the product of these systems.

For an example that I chose based on “can’t throw a rock without hitting” methods, see:

Neumann, G. et al. (1999.) “Generation of influenza A viruses entirely from cloned cDNAs.” Proc Natl Acad Sci USA. 1999 Aug 3; 96(16): 9345–9350.

We report here the generation of influenza A viruses entirely from cloned cDNAs.

I made these choices even though it was explicitly my goal to highlight the numerous ramifications of these DNA<>virus platforms. Namely:

That the emergence of a genetically engineered virus does not require some elaborate production of “tinkering” innocently with nature until an oopsie happens; it is as simple as ordering a sweater on Amazon. Therefore, the Wuhan Institute of Virology should not be presumed the origin of this virus given the lack of direct evidence; and circumstantial evidence pointing toward US foreknowledge and release.

It should not be assumed that the virus has sustained and spread entirely through natural transmission, as generation of infectious SARS-CoV-2 from “backup” copies (the DNA< portion of the construct) as well as “updates” to the code are trivial.

The text of my cross-post is only preserved in the original email (again, this was character-limited, forbidding me from being as nuanced and delicate as I would have liked to be):

Hudson articulates nearly perfectly why the idea of a functional "novel" virus is so outlandish: namely, the "job" of a virus is too complex and interconnected. He also goes further than I would in how to apply that premise to the evidence regarding SARS-CoV-2.

I have become convinced that the virus must be either 1) functional at injuring people*, implying successful biowarfare, good job Baric 2) somehow being directly deployed in high dose/purity.

Thus, I endorse Hudson's argument over JJ Couey's (which leans on an idea that no RNA viruses can sustain genetic fidelity, which isn't true) for why we should keep at least a grain of skepticism regarding viral spread vs vector delivery.

*Examples in my comment

Well there's many improbable events in life that seem coincidental. When there are too many coincidences, you know you are dealing with something that is intentional or premeditated. Human nature likes to ascribe causes to observed patterns of events.

1. Sequences of CTCCTCGGCGGGCACGTAG appearing in 2017 with DoD/CCP-funded papers with Moderna using it as the baseline sequence for their vaccines, things which have an extremely improbable chance of mutating randomly with functional epitopes

2. The likelihood of hiring X amount of people while having Y facilities ahead of time with Z foreknowledge to produce billions of vaccines in a short matter of time is not unlike the years of planning and maneuvering of troops and facilities before an all-out war

3. Actual footage of police-ops and government-funded perception-management crisis actors at certain events, coincidental appearances of the same media reporters at 9-11, Boston Bombing and Capitol Riot https://www.bitchute.com/video/sBh1JE6KhoPz/

https://youtu.be/uH3rCGdoBB0?t=994

Actual lawsuits against ballot manipulation https://www.brennancenter.org/sites/default/files/2021-05/1-20-cv-11889-MLW%20-%2011.06.20%20-%20Plaintiff%20Amended%20Complaint%20Against%20Defendants.pdf

The more evidence there is towards government-sponsored/corporation-sponsored interests, the more likely one should shift their bayesian prior towards the counterfactuals of official narratives.

4. Governments historically have been known to change statistical data by changing the methodology, the scales, or reporting by omitting information, or simply discontinuing altogether. We can see this with inflation indexes, interbank-lending, monetary supply. Banks rehypothecate all the time, but they apply netting, compression and other statistical manipulations to decrease the chance of noticing something being wrong. We can observe this in real-time with the definition of vaccines, pandemic, etc all changing over the years, to be 'technically correct'. Reclassification schemas are most often used.

5. Cui bono is a simple starting point for aligned interests. Not all actors or entities need to know everything about a plan, as long as certain places gain enough monetary incentives or sociopolitical power, they will abide to it. One can see the linkage of funding between all respective organizations. Politicians benefiting from lockdowns by selling stickers, posters, etc.

6. The plausibility or implausibility of something is partially determined by the amount of information that you encounter contrary to your viewpoints. If you only engage with the mainstream media, then technically, you will most likely be deceived because although they might provide factually true information, it is usually misleading (i.e. relative reduction of risk vs absolute)

7. There is an infinite amount of playbooks, tabletop games and PR handbooks available at think-tanks and NGOs. If you look at their 'hypothetical worlds', 99% of the time you will find an eerie match with the circumstantial timeline that is occurring at present-time. While it may not be conclusive evidence that events are premeditated, it lends more credence to the fact that there are geopolitical entities that have a solid comprehension of behavioral sciences that can affect the population. And that such entities are significantly more intelligent in predicting the behaviour of populations following a coordinated response, than by simple or arbitrary chance.

https://drive.google.com/file/d/1VtNrCBFInznU5Mns6w5fTnTgYKgJKFc1/view https://files.catbox.moe/kkmi6a.pdf https://www.centerforhealthsecurity.org/our-work/pubs_archive/pubs-pdfs/2017/spars-pandemic-scenario.pdf

https://groups.csail.mit.edu/mac/classes/6.805/articles/money/nsamint/nsamint.htm

http://files.catbox.moe/pu31u7.pdf http://files.catbox.moe/m8gerh.pdf

http://files.catbox.moe/0sv89v.pdf http://files.catbox.moe/sfk3km.pdf

http://files.catbox.moe/d9cfgh.pdf http://files.catbox.moe/tfxhbl.pdf

http://files.catbox.moe/8w33ka.pdf http://files.catbox.moe/0tjvc9.pdf

http://files.catbox.moe/eibpoc.pdf http://files.catbox.moe/0gxhsl.pdf

http://files.catbox.moe/u030sk.pdf http://files.catbox.moe/kkmi6a.pdf http://files.catbox.moe/k7te8l.pdf

8. While outrageous claims of 'bodily modification, graphene, etc' are not plausible simply because it would incur a greater incidence of harm than predicated, there is significant evidence that the intentions to transform the populace into controllable entities either by socio-behavioural, or technological controls are likely to occur eventually

https://horizons.gc.ca/en/2020/02/11/exploring-biodigital-convergence/

https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/986301/Human_Augmentation_SIP_access2.pdf

https://futurescape.chathamhouse.org/

And what may seem to be unbelievable in the present has happened in the past (i.e. media-deception regarding smoking, thalidomide, radioactivity, mercury and other medical interventions)

The easiest way to deal with an innate prior of 'unlikely that authorities in power would intentionally or unintentionally mislead the public' is, counterintuitively, to study history, whether it be the history of laws, the history of finance, or the history of whatever institutions in question have possessed or maintained such powers. A common schema that is often used is "present the counter" (we saw this early in the supposed covid-19 epidemic, where claims that XYZ never works, pandemics typically die out because virulence increases as a function of decreasing morbidity/lethality -- which is true, even for 'previous vaccinations' -- most transmissible pathogens incur higher evolutionary energetic costs if they kill their host or require more mutations to adapt to generalized environments like species-to-species jumps), introduce the disturbance (few cases), amplify the disturbance (lockdowns), bait&switch (promise of freedoms), denial (of possible AEs), and eventual acceptance/confirmation (controlled) -- now we get to observe "admissions" of negligible effectiveness and/or potential harm for sub-populations (like young males).

Ultimately, I feel like the dialogue on most of these topics is hopelessly flawed by a desire to simplify and classify. I mean, what does “inside job” even mean? The government did it? What the hell is the “government” anyway?

As always, I feel as much as we would like to construct overarching narratives, most events are too complex to do so. All one can hope to do is ask very specific questions to find particular answers. With 9/11, WTC7 stands out like a sore thumb. I might be able to buy into airplanes and jet fuel, combined with a unique (and ridiculous?) architectural configuration leading to the pancaking effect for the Twin Towers, but there’s frankly no reasonable explanation why I shouldn’t believe my eyes when it comes to 7 looking like a controlled demolition. As far as the grand scheme of things goes, that means the government (whatever that is) and its authorities are not completely accurate in their account of the events of that day. Which means they’re not to be trusted entirely. Also, water is wet.

COVID and the pandemic is an even trickier nut to crack because it’s not a singular event. Much of it is, by nature, unable to be seen. That means it doesn’t even require a conspiracy of deception to create confusion. It just needs ignorance, hubris, profit motive, and a willingness to engage in storytelling. The latter is perhaps the most powerful. Ultimately, we’re all “conspiracy theorists”, mainstream and alternative sources alike. It’s so hard to not take something that we’ve deemed fact and extrapolate dozens of additional conclusions onto it, and most of the time we’re completely unaware that we’re doing it. And unfortunately, this method of narrative construction is the only way to even build consensus, because you can’t agree with something if nothing is being asserted. So to me, aside from on the most specific inquiries, consensus will always be wrong.