A small study looked for signs of increased antibody response to syncytin-1 - a protein vital for successful pregnancies - in 15 female subjects who received the Pfizer Covid-19 vaccine in Singapore. What they found, to their surprise, was an immediate and sustained increase - in all 15 subjects. The authors went on to conclude that their own findings were not “significant.” But if an increase in antibody response to syncytin-1 is not significant, why did the authors look for it in the first place?

Recipients of the experimental Pfizer and Moderna mRNA Covid vaccines, and the Johnson & Johnson experimental vector Covid vaccine, will be relieved to hear that they passed all of the rigorous, standard FDA-required reproductive toxicity trials1. They were extensively tested for relevant impact-signals in male and female fertility, “parturition” (birth), lactation, fetal mortality (pre- or peri-implantation loss, early or late resorption, miscarriage, stillbirth, neonatal death, peri-weaning loss), dysmorphogenesis, alterations to growth, and offspring functional impairment (locomotor activity, learning and memory, reflex development, time to sexual maturation, mating behavior, and fertility) - all in the span of a few months in 2020, and they passed with flying colors!

Wait, that’s not quite right. The novel vaccines passed none of the FDA-required reproductive toxicity trials. They had no reproductive toxicity trials. Because vaccines do not undergo FDA reproductive toxicity trials, because they are not “drugs” - even if they are based on a novel lipid-nanoparticle platform that was developed for the delivery of drugs. Well, that might seem troubling, but - but! - the Pfizer vaccine, alone, did, after certain concerns about protein homology were raised, undergo a rigorous, 15-patient study (15!) measuring changes in blood plasma antibody response to a critical placental cell-fusion protein (which is also implicated in psychological regulation) - and it found… well… changes. In every subject.

But don’t worry, because these changes are not “significant.”

Such, more or less, comprises the account of events that prompted my interest in the study from Singapore. That account begins at 1:02:40 of the Highwire interview2 between host Del Bigtree and outspoken Covid mitigation- and vaccine-skeptic, Mike Yeadon. Biologist, pharmacologist, former longtime VP and Chief Scientific Officer of Pfizer Allergy and Respiratory research, and retired entrepreneur, Yeadon is a respiratory-virus conventional wisdom hardliner, who dismissed and dismisses still any notion of SARS-CoV-2 as a meaningfully “novel” coronavirus. Even if the reader is inclined to more flexible thought regarding the nature and “novelty” of SARS-CoV-2, nothing in Yeadon’s predictions, criticisms, and overall wholistic priority-weighting-model of the liberal West’s responses to Covid has failed to hold up in any significant degree, and the interview makes for an exceptional, moving view.

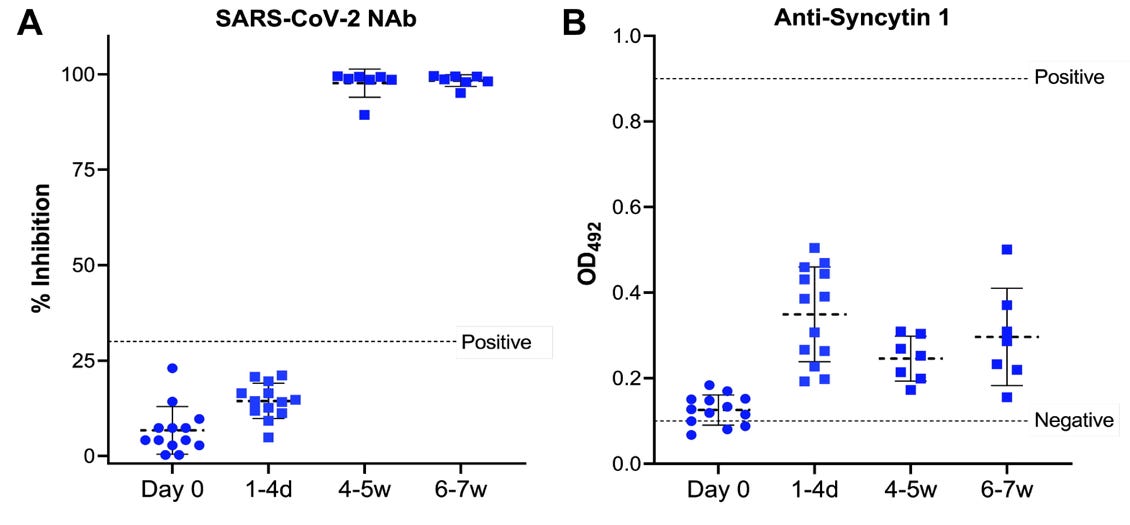

Yeadon, after prompting, provides his host with an “unrehearsed” assessment of the Singapore study. First the setup: It is established that the aim of the study was to confirm a pre-defined bias. In December, Yeadon and his collaborator Dr. Wolfgang Wodarg raised concerns over reproductive toxicity in the Covid vaccines, speculating that the mRNA Covid vaccines might, owing to molecular homology, induce antibody sensitivity to syncytin-1. The study aimed, explicitly, to demonstrate that these speculations are totally dismissible “vaccine hesitant” misinformation. Then, the “real time” result: Yeadon flatly affirms his conclusion that the study did observe a marked increase in plasma sample antibody binding to syncytin-1 post-vaccination. He adds, regarding the way the study graphs its own results, that after reviewing the study twice it was apparent that the “positive” value drawn high above the plasma results showing an uptick after vaccination was “completely arbitrary”: It has “no basis.” The study’s plotting of the relevant test results is displayed in the video. Here it is, from the study itself:3

To my unenlightened eyes, the signal looks clear and dramatic: the vaccine is prompting unmistakable increases in blood plasma binding response to the vital placental formation protein syncytin-1. On the other hand, it could be nothing. Hey, look how high up that “positive” line is! Also: how am I supposed to compare a “% Inhibition” result with a “OD” result (spoiler: they are not supposed to be compared)? Intrigued, I set out immediately to read the study in question and either 1) acquire a low-cost understanding of the results or 2) give up on caring, and move on to other things. Instead I encountered a document so inscrutable and yet intriguing - so tantalizingly just out of reach of my comprehension - that I would spend multiple days trying to figure it out.

Reality signifies her admiration for the most valid of human intuitions with mild, equivocal critique. The conscientious contractor, on the home renovation reality television show, punctiliously nominates some minor, aesthetically offensive post or brace to vanquish with a mallet, only to discover a raging apocalypse of wood-rot within the skeleton of the purchased house, at precisely the worst time, in precisely the nick of time. So too does the Yeadon and Wodarg pick-axe, casually hurled forth to “clear away” any possibility of cross-reaction between a hypothetical SARS-CoV-2 s-protein antibody with syncytin-1, uncover the disquieting hints of a more generic and instantaneous post-Covid-vaccine anti-syncytin-1 immune response. Just what is it that might linger in the bones of this novel dwelling, into which much of humanity has already moved?

We will arrive, before the end of this essay, upon the reasons I interpret the Singapore study as having raised such a question. But, before proceeding into a more detailed interpretation of the findings, we should temper our expectations.

To recap, the study was prompted by item XI of Wodarg and Yeadon’s December 1, 2020 petition4 for a stay of action to the European Medicines Agency, which ventured:

Several vaccine candidates are expected to induce the formation of humoral antibodies against spike proteins of SARS-CoV-2. Syncytin-1… which… is responsible for the development of a placenta in mammals and humans and is therefore an essential prerequisite for a successful pregnancy, is also found in homologous form in the spike proteins of SARS viruses. There is no indication whether antibodies against spike proteins of SARS viruses would also act like anti-Syncytin-1 antibodies. However, if this were to be the case this would then also prevent the formation of a placenta which would result in vaccinated women essentially becoming infertile. To my knowledge, Pfizer/BioNTech has yet to release any samples of written materials provided to patients, so it is unclear what, if any, information regarding (potential) fertility-specific risks caused by antibodies is included.

While (Yeadon recounts in his interview) this was met with a wave of derisive backlash, in an era of epistemic anarchy the outside observer has few tools with which to judge Wodarg and Yeadon’s speculation or the counter-arguments on their theoretical merits. Yet there is no apparent disputing that the Singapore study is the first and only known attempt by anyone - including the manufacturers of the vaccines - to check whether an antibody response of the type predicted by item XI is detectable; and no apparent disputing that the study finds that it is detectable: it literally detects it. So, score one for Dr. Wodarg.

Both Wodarg and Yeadon have been vehemently critical of the artificial, media-constructed “pandemic” narrative, and the political and societal responses it has made possible; both seem to have been driven nearly mad by society’s self-inflicted Covid panic madness. But while Yeadon holds unwaveringly to conventional understanding of coronaviruses to frame a critique on the “novelty” narrative surrounding SARS-CoV-2, Wodarg strikes me as inclined to the Ian Malcolm approach, his colorful blog evincing a reflexive pessimism toward the capacity of the human mind to comprehend something as variable and interconnected as the virus-immune-response ecosphere. These facts may all be markers of genius, insanity, or both: but they tell the observer nothing about what should be concluded regarding the theoretical impact of the Covid vaccines on placental formation. Yet the observer need not dwell on the theory, if she can rely on the trial.

Yet that very subject - the reliability of the trial - is quite hard to make out. First, it was incredibly small. It included only 15 participants, of only three ethnicities (4 Malay, 4 Indian and 7 Chinese), all in the same workplace (the Singapore National University Hospital), and of markedly advanced mean age (40!); 2 were not in the initial group of 13, but were added after being discovered to be pregnant after their first dose; 1 member of the initial set did not return for the post-3-week, post-2nd-dose tests; and no additional follow-up tests were conducted despite a failure of any of the remaining subjects to return to “negative control,” i.e. their pre-vaccine baselines of syncytin-1 binding, at week 7.

At each dose-relative timestamp - right before the first dose, immediately or a few days after the first dose, and (now in the wake of the second dose) four to seven weeks after the first dose - the study authors sampled some of each subject’s blood, spun it around to get rid of the blood cells, and poured the leftover plasma into its own unique well on a plate containing syncytin-1, to observe the degree to which the plasma contained anything that would bind. They recorded the resulting observations and made a chart out of it. They leaned over things, and murmured “hm” in contexts which suggested high discernment.

The study provides no absolute calendar for subject dosage, so absolute synchronicity - that all the recipients received their first dose on the same day - cannot be ruled out, which means a totally coincidental external, environmental factor in the hospital system, or Singapore, or Earth, promoting increased anti-syncytin-1 response among ~40-year-old female central and southeast Asian healthcare workers cannot be ruled out either.5 Nor is it impossible that all the subjects, prompted by a fad within the hospital staff, self-administered some other substance at the same time without reporting it to the authors.

Finally as regards the limited scope of the study, it only involves the Pfizer vaccine. While the logic of Wodarg’s s-protein / syncytin-1 homology speculation necessarily implicates any mRNA, vector, or protein subunit Covid vaccine, this study on its own cannot validate the mechanism of its observed result. Because it does not include any other Covid vaccines, it does not rule out a mechanism based on the Pfizer vaccine’s particular excipient cocktail (rather than on the mRNA package, or the epithelial cell generation and expression of SARS-CoV-2 s-protein that it induces). This, however, thoroughly does not disfavor acting as if all current Covid vaccines are implicated by the study results, until further information is available. At the same time, there is the “anything’s possible” possibility: that these results were just an absolute freak event, that will never be replicated, and this entire essay will have been a waste of time. This possibility, however, also does not disfavor acting as if the results could be replicated, for now.

Moving from scope we encounter the vagaries of measurement, which center on the particular qualities of a testing platform called ELISA, but which extend to three different realms of epistemic uncertainty. To begin with the end, the study does not employ any industry-standard threshold or score-set for translating “measured syncytin binding” to “clinically significant risk of reproductive or psychological disease.” Although other studies have used ELISA-measured syncytin-1 binding to suggest links between antibody sensitivity to syncytin-1 and schizophrenia6 (yum!), the science is in its infancy (no tragically appropriate pun intended). There is no agreed standard for how much binding constitutes evidence of reproductive or neurological toxicity: a point which will haunt much of our analysis below.

To bridge with the middle, translating particular Optical Density results into a non-relative quantification of the presence of any particular antibody in a sample exceeds the limits of the ELISA platform, as will be detailed below.

And to end with the beginning, no industry standard exists for calibrating a home-made ELISA test specifically for the context in which this study finds itself: plasma antibody detection. One researcher’s antibody ELISA result cannot be compared to another, as all of the choices each researcher makes regarding the multiple dilute, soak, incubate, fix, wash, and stop steps affect the “exposure” of the ELISA plate. While a highly experienced researcher may be able to reconstruct from the recipe recounted by this or any other particular study an accurate guess of how high a concentration of a particular antibody is contained in the plasma which produced a particular Optical Density result, the lay reader is left in the dark.

Thus the authors of this study, after curtly jotting down their ELISA process, can leap straight to naked assertion of the (non-) “significance” of their own results. The intervening calculations of

Optical Density x Trial ELISA Exposure Factor = Antigen Concentration

Antigen Concentration x Statistically-Established Toxicity Factor = Clinical Toxicity

either exist only within the subjective consensus of highly specialized experts, or do not yet exist, or can not exist. So what is the reader to make of the several naked, bizarrely absolute assertions the study goes on to drop in our lap:

[No study participants] had placental anti-syncytin-1 binding antibodies at either time-point following vaccination… [!]

COVID-19 vaccination with BNT162B2 did not elicit a cross-reacting humoral response to human syncytin-1 despite robust neutralising activity to the SARS-CoV2 spike protein…

Our work directly addresses the fertility and breastfeeding concerns fuelling vaccine hesitancy among reproductive-age women, by suggesting that BNT162B2 vaccination is unlikely to cause adverse effects on the developing trophoblast, via cross-reacting anti-syncytin-1 antibodies…

And how do they square with the clearly visible post-vaccine rise in the study’s own chart in Figure 2-B?

Nothing, and they don’t.

Buried right in the middle of the aforementioned curt, technocratic account of the particular ELISA methodology employed by this study - as if to ward off attempts by outside readers to perceive the arbitrary nature of their conclusions - the authors acknowledge that they are, in fact, flying utterly blind with regard to the calibration of their tests and even more-so with the implications:

Oh. Ok. There is no data on clinically-significant thresholds of anti-syncytin-1 antibodies. That sounds like Science Speak for, “no one on Earth knows how much of this will make you unable to have a baby that is not dead.” And anti-syncytin-1 antibodies were found to increase in all 15 subjects! Then, why do the authors claim to have determined that the elevated syncytin-1 binding found in their own tests in every subject is not clinically significant?7 What are we missing?!

It’s time to have a talk about ELISA.

8Lest we imagine that the modern era has reduced even the field of medicine into a digital hell of precisely-regulated, algorithmically self-calibrated nano-molecular logical arrays, there is still ELISA. If the five letter acronym is intimidating, it shouldn’t be: the L stands for ligand (not really, it stands for “linked”), which is another word for “sticky molecule.” The construction as a whole stands for “sticky molecule trap” (not really). A relic of the 1970s, ELISA more closely resembles the cutting edge of the 1850s - wet plate photography - but with a mixed order of operations. Plates are made wet; chemicals do stuff; plates get darker; a light is shot through the plates.

Yet if the brute simplicity of the ELISA concept is reassuring, it shouldn’t be. It’s a byzantine array of conceivable operative structures which are themselves individually byzantine in the variability of their chemical procedures. Just as there can be no one-size-fits-all overview of the chemical processes of photography, or cooking, or painting, there can be none for ELISA. It is an art, not a science. Thus to escape from a hyper-specific account, such as the one provided by the study authors above, we must speak purely in crude, overly broad terms.

In ELISA, an antigen, which is to say a protein, which is to say a sticky molecule, is glazed over a bunch of upside-down bubbles of plastic. It may be expected to affix to the plastic directly, or maybe it is being added to a preexisting glaze of, well, antibody - but the end result is the same. Antigen glaze. The plate may at this point be shipped off for broad use along with tailored calibration solutions that end-users can use to easily see when they have messed something up: cool! Or, this first part can all be done from scratch, in the researchers’ own lab. Now that the plates are ready, either a solution of antibody, or a sample of some kind of liquid (like, that used to be inside of a person) within which one wants to detect or “measure” the presence of an antibody (or either of those things combined with a competing protein, to see how well the given samples block binding between the plate and the protein: this is called “Crazy Style”9) is poured over the bubbles. Next the bubbles are washed for the third or fourth time. At last an enzymatic marker, one which may additionally discriminate for particular types of antibody or not, is poured over the bubbles: the marker attaches to whatever attached to the thing that attached to the thing that attached to the thing, and now you’ve got darkness, a.k.a. Optical Density.

Before we move on to that, we can already observe and remark on the defining characteristic of the ELISA method: it is primarily good at revealing relative differences, not measuring absolute concentration. In so far as ELISA can demonstrate the latter, it is only in the sense that it can return a result of near-zero: the system only has one absolute binary, which is “none/some.” And even those could be false results, caused by mistakes in the process or by poor process design. One way of describing the none/some application of ELISA is “qualitative,” as in the quality of having some of something at all.

But, if you take a lot of samples together, subjected to the same specific, consistent ELISA process and see clear differences in binding response to a specific antigen10 among those samples, you have a clear signal for ranking the samples according to antigen reactivity. This method of using ELISA can be called “semi-quantitative.” Rather than reveal an absolute figure for how high the concentration of an antibody is in a given sample, it can “quantify” the relative differences between samples. The world is starting to feel comprehensible again, no? Don’t get used to it.

At the end of the process of dousing their plates with blood and other various magic potions, the authors of the Singapore study describe the process of measuring darkness. As always, they are not overly florid:

OD492 was analysed in the Sunrise microplate reader.

Ah. Of course. The Sunrise microplate reader. Who hasn’t analysed a bit of OD492 in a Sunrise microplate reader once in a while, to blow off steam? To credit the authors, some context was already provided in the beginning of the paragraph: OD492 is the Optical Density, as observed by the authors’ Sunrise microplate reader, when 492nm wavelength light was shot through the ELISA plates. Alas, our beautiful and wild, “artistic” ELISA process has been flattened and converted to a digital and highly mathematical measurement.

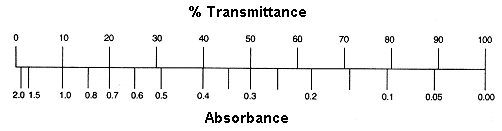

A given industry can have any system for measuring opacity that it wants to, but Optical Density, which is a mathematical formulation of the “percentage of light transmitted,” is more useful in many contexts than the raw percentage itself. Optical Density - the translation of raw percentage values through a mathematical formulation, and plotting the result - allows very “low” opacity measurements to share space with very “high” opacity measurements, which are 6 orders of magnitude apart from each other, in a single chart that only uses 3 orders of magnitude and doesn’t require zooming in or out (or printing onto four hundred pieces of paper) to visualize. It is not, however, any better than raw percentage for explaining reality. If one substance is allowing 50% of light through (“transmission”) and another is allowing .1% of light through, nothing intelligible is expressed by conveying the corresponding Optical Density values:

Light transmission: 50% / Optical Density: .3

Light transmission: 10% / Optical Density: 1

Light transmission: .1% / Optical Density: 3

Optical Density mathematically obscures the light transmission value in order to make charts more useful: so that for all values of light transmission less than 10%, the chart “self dilates”. The Optical Density math makes the difference between a .1% and .15% transmission, and a 10% and 15% transmission, visible on the same chart. That’s all.11
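For readers who want to check the three pairs listed above, here is a minimal sketch. It assumes the standard base-10 definition of Optical Density (the negative logarithm of the fraction of light transmitted), which the essay does not spell out but which reproduces the listed values. The function name `optical_density` is my own label, not anything from the study.

```python
import math

def optical_density(transmission_percent: float) -> float:
    """Convert percent light transmission to Optical Density.

    Assumes the standard definition OD = -log10(T), with T as a fraction.
    """
    if not 0 < transmission_percent <= 100:
        raise ValueError("transmission must be in (0, 100]")
    return -math.log10(transmission_percent / 100.0)

# The three transmission values listed in the essay.
for t in (50, 10, 0.1):
    print(f"Light transmission: {t}% / Optical Density: {optical_density(t):.1f}")
```

Each tenfold drop in transmitted light adds exactly 1 to the Optical Density, which is the "self dilating" behavior described above.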

For all values of light transmission between 10% and 90%, the Optical Density mathematical formula behaves more like pig-latin. It produces a nearly-linear, but semantically scrambled result:

As the product of a mathematical formula, Optical Density imposes no constraints on the capacity to measure, compare, and interpret light transmission values. However, the Optical Density values of the Singapore study all correspond to light transmission within the easily-compared, linear range between 10% and 90%, which makes our still-pending comparison and review much easier. There is a reason for that. The reason is that the ELISA process of the Singapore study was calibrated to make comparison possible.

Excessively dark plates, those which would produce Optical Density values greater than 1, are not ideal for comparing with one another. For one thing, in the upper range of darkness, representing levels of light transmission below .1%, even a Sunrise microplate reader has significant accuracy limits, so measured results cannot be used for detailed analysis. But even more importantly: once the antigen glaze in a given plate has been fully bound, what’s supposed to happen to the rest of the antibodies? They will not make it to the reader. One cannot compare how many “extra antibodies” two samples had; they were not measured. Thus a plate that has been made nearly opaque before going into the Sunrise microplate reader has been, we could put it, “overexposed.”

This can be done intentionally: it represents the ELISA calibration one would choose for a “qualitative” test. You don’t want detailed analysis. You just want a bright red warning sign. In other words, if you just want to see if there is some of something there, you apply minimal dilution to your sample so that the ELISA plate will darken as much as possible.

But if you want to compare the relative amount of something between multiple samples, any calibration of your ELISA process that puts you out of the 10 to 90% light transmission range has been improperly designed: it is either under-diluted (“overexposed”) and differences between the samples will fail to show up because the plates have been saturated before all antibody had a chance to bind; or it is over-diluted (“underexposed”), and background staining from the other solutions, or small impurities, are going to significantly flatten the observable difference between samples.
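The rule of thumb in the paragraph above can be written down as a small check. This is only a sketch: the 10% and 90% cutoffs are the essay's own working range, not a published standard, and `exposure_class` is a hypothetical helper name.

```python
def exposure_class(od: float, low_t: float = 10.0, high_t: float = 90.0) -> str:
    """Classify an Optical Density reading by the light transmission it implies.

    low_t and high_t are the essay's informal 10%-90% window (an assumption,
    not an industry threshold).
    """
    transmission = 100.0 * 10 ** (-od)  # invert OD = -log10(T)
    if transmission < low_t:
        return "overexposed"   # under-diluted: the plate is too dark
    if transmission > high_t:
        return "underexposed"  # over-diluted: background noise dominates
    return "usable"

for od in (0.02, 0.3, 2.0):
    print(od, exposure_class(od))
```

A reading that comes back "usable" says nothing about clinical meaning; it only says the plate was neither saturated nor washed out.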

This contextualizes the “positive control” employed by the authors of the Singapore study: they calibrated their dilution of pure, rabbit-grown anti-syncytin-1 “control” antibody, and the time of their soaks, until it stained their ELISA plates so that the Sunrise microplate reader spat out: “.9.” To return to the photography metaphor, they calibrated the brightness of the signal until it produced a pre-determined level of exposure on the plate: they chased the film with the lightbulb. This “get the result you wanted” process - what is misleadingly labeled in ELISA terminology as defining a “control” - is useful not for experimental conclusions, but rather for quality control. If any test samples share a plate with previously calibrated “positive control” that does not read .9 in the Sunrise microplate reader, you throw the whole plate out and run the thing back from the start, because it means Kyle dropped ketchup on the plate again. The positive control doesn’t tell you the significance of your sample’s result: it tells you that the difference between a sample on one plate can be reliably compared with the sample on another plate, because the exposure was consistent.
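To make the quality-control role concrete, here is a sketch of the only decision a positive control supports: keep the plate or throw it out. The target of .9 is the value described above; the tolerance of 0.05 is purely an assumption for illustration, since the study states no acceptance band, and `plate_is_valid` is a hypothetical name.

```python
def plate_is_valid(positive_control_od: float,
                   target_od: float = 0.9,
                   tolerance: float = 0.05) -> bool:
    """Return True if the plate's positive control landed near its calibrated target.

    tolerance is an illustrative assumption; the study reports no such band.
    """
    return abs(positive_control_od - target_od) <= tolerance

print(plate_is_valid(0.91))  # control read as expected: keep the plate
print(plate_is_valid(0.70))  # control is off: discard and rerun
```

Note what the function does not take as input: the sample readings. The control validates the plate, not the plasma.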

That’s it. That’s all the “positive” line represents in Figure 2B. It means, literally, “Here’s .9!” To forward the pre-defined “positive control” Optical Density value as a marker of clinical significance of the comparative antigen binding activity of a human plasma sample is beyond insane. The authors prepared their home-made syncytin-1 antibody plates, and found that a 1:50 dilution of human plasma did not result in as much binding as the 1:250 dilution of pure anti-syncytin-1 calibrated specifically to produce a .9 result on their plates? Ok, so?

Let’s be clear: The question the authors sought to answer with their ELISA test was not:

“Will these blood samples score a .9 on the ELISA plates we prepare in-house with syncytin-1 ordered from MyBioSource, San Diego?”

Because that question would be stupid. Instead, it was:

“Will subject plasma bind more after vaccination than before?”

The answer to the second question is yes. The answer to the first question is no. The authors looked for the second answer, found it, and reported the first in its place.

Just how much of a (relative) difference did the authors find between the pre-vaccination and post-vaccination syncytin-1 binding activity? That’s hard to say, because it is relative. Having borrowed an ELISA industry term to imply things that should not be implied about their “positive control,” the authors make a feint to proper experimental connotation with regard to their “negative control.” They are portraying the Optical Density readings found on day 0 as baseline, which is not actually a “negative control” in the ELISA industry sense. An ELISA “negative control” is the equivalent of just filling one or two bubbles on the plate with water, or with diluted-to-the-extreme antibody, to serve as a warning if the plate becomes overexposed or contaminated: again, the result of which must be to throw the plate out and try again, not to form meaningful conclusions about the results. The authors either did not employ a true “ELISA negative control” (an extremely diluted version of their positive control, to flag for overexposure) or did not mention it. Nor is it actually a “control” in the proper experimental sense. In fact, the study neglected to include that type of control - a set of Singapore National University Hospital workers who did not receive the vaccine at all - as well. Such a step, as mentioned before we took our deep dive into the results, would have been useful to rule out any coincidental environmental factor.

It does appear, however, that the authors used the Day 0 plasma - the “negative control” - for one key step: to calibrate the dilution factor and soak times of their plasma samples for the ELISA test as they had constructed it. We can conclude this, because either the very first dilution/timing combination they chose conveniently happened to produce a baseline, pre-vaccine binding result in the bottom of the useful ELISA “semi-quantitative” range; or they got results that were too dark or too light, and tweaked their approach until they arrived at the same place: ~.1 optical density. Again, this leaves the “positive control” as totally meaningless for the results of the study. If it had required 10 times more or less dilution of plasma to calibrate the Day 0 results to ~.1, the “positive control” would have remained in the same place. At the same time, if the authors had decided to dilute their plasma samples to half or a tenth of the factor they chose, the resulting Optical Density results would have shot up to the top of the chart. For a semi-quantitative ELISA test, the plates and the samples must meet somewhere in the middle. The positive control does not help determine the proper sample “exposure” to achieve that meeting; it is determined by the chemical composition of the plate, the chemical affinities of the antibodies being detected, the potential magnification effects of the chosen marker chemical or of intermediary marker-antibody proteins, the temperature of the lab: everything but the positive control.

With all of that out of the way, what can we now determine about the results?

What does it tell us, in other words, that the Day 0 plasma darkened the Singapore study’s ELISA plates so that a mean of 75% of light was still transmitted; the Day 1-4 plasma darkened the plates so that a mean of only 45% was transmitted; that, after the second dose, among the half of the participants tested on week 4-5 only a mean of 57% was transmitted; that among the other half tested on week 6-7 only a mean of 51% was transmitted; and that in the two samples with the highest binding response after vaccination, only 32% was transmitted?

It tells us the vaccine promoted binding!
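The transmission figures quoted above can be translated back into Optical Density and compared against the Day 0 baseline. This is a sketch under two assumptions: the standard definition OD = -log10(T), and the mean transmission percentages exactly as stated in this essay (they are not re-derived from the study's raw data). The dictionary labels are my own shorthand.

```python
import math

# Mean light transmission (%) at each timepoint, as quoted in the essay.
reported_transmission = {
    "Day 0 (baseline)": 75,
    "Day 1-4": 45,
    "Week 4-5": 57,
    "Week 6-7": 51,
    "Two highest samples": 32,
}

def optical_density(transmission_percent: float) -> float:
    """OD = -log10(T), with T as a fraction (standard definition, assumed here)."""
    return -math.log10(transmission_percent / 100.0)

baseline_od = optical_density(reported_transmission["Day 0 (baseline)"])

for label, t in reported_transmission.items():
    od = optical_density(t)
    print(f"{label}: OD {od:.2f}, {od / baseline_od:.1f}x baseline OD")
```

The ratios rank the timepoints relative to one another, which is all a semi-quantitative ELISA can offer. They are not ratios of antibody concentration, for the reasons laid out earlier.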

Thus we have come all this way, examined the myriad intricacies of an entire art, and of the bizarre mathematical representation used to quantify that art. We’ve laughed, we’ve loved, we’ve analysed in a Sunrise microplate reader. All just to verify what intuition would have sufficed to discern:

The Singapore study proved that Dr. Wodarg’s warning is valid.

Were the authors, after having observed an incredible and unmistakable signal for what the hypothesis had set out to disprove, bent on deception? The evidence is not weak. Why, otherwise, the misleading use of the positive control Optical Density value as the threshold for anti-syncytin-1 ELISA result “significance;” and the reinforcement of this heavy-handed standard within the results chart, in the form of the dotted “positive control” line? (Where was your threshold again? .9? I can’t picture where that would be, could you draw a line?)

Similarly, why the use of the “% Inhibition” value for vaccine-induced response to SARS-CoV-2, rather than a custom ELISA Optical Density value for s-protein binding, so that the results could be compared head-to-head with the syncytin binding tests? Would not this have been a better design decision? The authors, it turns out, assert that they used “% Inhibition” because they were told to by the “manufacturer’s instructions” of the generic test they deployed - the GenScript SARS-CoV-2 Surrogate Virus Neutralization Test Kit. GenScript abbreviates this product as sVNT (“GenScript: Make Research Easy”12). According to those instructions, allege the authors, every Inhibition result equal to or above a certain threshold - 30% - is deemed a positive (30% Inhibition! Bam! We did it, baby!); every result below, a negative. In other words, they had to measure it this way.

The reader might now ask, did the researchers use a clinical SARS-CoV-2 antibody test kit, one that was not capable of more precise results, because it was all they had on hand? No: the GenScript SVNT is designed for research and is not to be used in “diagnostic procedures.” In fact, ladies and gentlemen, the top-of-the-line, Made In USA, Genetic Age GenScript SVNT is suitable for both what it describes as “qualitative” and “semi-quantitative” applications, the former intended to discern in the strongest terms possible between “none/some” and the latter intended to assess relative amounts. Kids, your parents wished they could study antibodies with a test like this!

Wait. This should sound familiar to us. Could it be… that the top-of-the-line, Made In USA, Genetic Age GenScript SVNT is… an ELISA test?

[GenScript SVNT Summary:] 96 well ELISA format test with ACE2 protein attached to the plate and HRP-labeled RBD used for detection13

And so there it is: Plates are made wet; chemicals do stuff; plates get darker; and it all ends, once again, in a Sunrise microplate reader (“Sunrise microplate readers: What happens in a Sunrise, stays in a Sunrise.”) We can therefore revisit Figure 2 and imagine we understand perfectly what we are seeing: an intentionally high-exposure “qualitative” ELISA test, the result of which has been intentionally and misleadingly reverse-translated from “Optical Density” back to “% [of light] Inhibition,” side by side with a standard, balanced-exposure “semi-quantitative” Optical Density ELISA result.

In fact, the story is more complicated than that. Poring through the innards of the GenScript SVNT user protocol manual reveals that “inhibition” is the product of a formula useful for translating “Crazy Style” ELISA test results, where more binding indicates less antibody efficacy, into a scoring system where the higher numbers = good.14 We could decode the methodology further in order to contextualize the low “Positive” baseline, but it would not contradict in a significant way what was suggested above: the authors used the GenScript SVNT in qualitative mode. The test, and the math used to convert a calibrated cutoff ratio to a percentage value then displayed on Figure 2A, were designed to find results close to 100. In Figure 2B, the home-made ELISA test for syncytin-1 binding is designed not to. In Figure 2A, what is being examined is “is there any of this at all?” In Figure 2B, what is being examined is “how do different samples rank relative to each other in amount of this?”
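
For readers who want to see the arithmetic, here is a minimal sketch of that translation. It assumes the conversion, as I understand the kit's published protocol, is one minus the ratio of the sample's Optical Density to the negative control's Optical Density, expressed as a percentage. The function names and the Optical Density values below are hypothetical, invented purely for illustration; none of them come from the Singapore study. The 30% cutoff is the one the authors cite.

```python
def percent_inhibition(sample_od: float, negative_control_od: float) -> float:
    """Translate a blocking-ELISA Optical Density into "% Inhibition".

    Assumed formula (not quoted from the study):
        inhibition = (1 - sample OD / negative control OD) * 100
    In a blocking ELISA, MORE color (higher OD) means LESS antibody,
    so the formula flips the scale to make "higher = more antibody".
    """
    if negative_control_od <= 0:
        raise ValueError("negative control OD must be positive")
    return (1.0 - sample_od / negative_control_od) * 100.0


def classify(inhibition_pct: float, cutoff_pct: float = 30.0) -> str:
    """Qualitative call: at or above the cutoff is 'positive'."""
    return "positive" if inhibition_pct >= cutoff_pct else "negative"


if __name__ == "__main__":
    # Hypothetical plate readings, for illustration only.
    negative_control_od = 2.0
    hypothetical_samples = [
        ("pre-vaccination", 1.9),
        ("early post-dose", 1.2),
        ("late post-dose", 0.1),
    ]
    for label, od in hypothetical_samples:
        pct = percent_inhibition(od, negative_control_od)
        print(f"{label}: OD={od:.2f} -> {pct:.1f}% inhibition -> {classify(pct)}")
```

Under this assumed formula, a sample that blocks almost all binding leaves almost no color on the plate and lands near 100, which is why a yes/no test of this kind reports values crowded toward the top of the scale once antibodies appear.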

The finding in 2B is unambiguous, and dramatic, as concluded above, although the significance is unknowable. And, when we cross-reference with the results of the GenScript SVNT in qualitative mode, it becomes apparent that the heightened binding to syncytin-1 appears before the vaccine has had time to prompt the generation of any SARS-CoV-2-s-protein antibodies at all.

Now we may return to the metaphor of the catastrophe of the newly purchased house struck by the mallet. The relative, easily-ranked results in Figure 2B may not verify Wodarg’s speculation: in fact they may imply something more harmless - a temporary IgE-antibody response to syncytin-1, that will only imply short-term potential reproductive toxicology - or something even worse. Critically, the day 1-4 results are measuring how the subjects’ plasma replies to syncytin-1 before there has been any time for the anti-SARS-CoV-2-s-protein IgM and IgG antibodies promoted by the vaccine - the antibodies Wodarg suggested would potentially be sensitive to syncytin-1 - to appear in any detectable form. This conclusion is not only mandated by the current understanding of humoral immunity,15 but reinforced by the authors’ own GenScript SVNT results. The Day 1-4 results thus suggest a more immediate proliferation of targeted anti-syncytin-1 antibodies, which then sustains itself for weeks (or is supplanted by the arrival of s-protein-specific free antibodies fulfilling Wodarg’s original prediction).

This opens up an array of questions that the study fails to provide answers for: How much increased syncytin-1 binding would have been observed immediately before the second dose, in week 3? How much would have been observed in weeks 4-7 without the second dose (the two subjects included midway, after discovery of pregnancy, met this condition; their results are nonetheless not singled out)? How long would increased syncytin-1 binding have been observed if the subjects had continued to be tested after the 7 week cutoff? How much would be observed if they were tested now? Why - why - would you set a cutoff of 7 weeks when measuring the effect of a medical intervention on plasma antigen-response, unless you had assumed from the start that no effect would be observed at any point?

The “lone-wolf” doctors and researchers who have been expressing skepticism about the vaccine have, recently, amplified their concerns about the evident post-vaccination proliferation of the mRNA package throughout the body, and likely associated production and expression of clot-promoting s-protein by vascular endothelial cells wherever the mRNA package ends up.16 Is this the effect that the Singapore study has unintentionally detected? If so, then any other broad, unexpected antigenic upticks activated by an s-protein-induced vascular auto-immune response would obviously have gone undetected. The study’s focus was too narrow.

Of course, this sword cuts no less sharply on the other end: What if, as suggested in the caveats laid out in the beginning of this essay, the elevated binding to syncytin-1 in these 15 subjects had nothing to do with the SARS-CoV-2-s-protein, or the mRNA instruction set for the same, at all? What if it instead was a response to something in the Pfizer excipient cocktail? And what if that ingredient is shared with other Covid-19 vaccines, or other modern vaccines in general, or other modern drugs? If an elevated immune response to syncytin-1 could be so easily discovered within the blind spot of this novel vaccine, how do we know which other recently developed vaccines and drugs don’t have the same effect skulking at their eight o’clock? Who is to say that the baseline reactivity observed by this study on Day 0, before the administration of the Pfizer Covid-19 vaccine, was not already significant itself?17

And of course, as regards the biggest question of all, the study could never have possibly told us anything: What clinical effect will the observed increased syncytin-1 binding have on these 15 women in Singapore? No lone squadron of ELISA tests could determine that; it will require years of observation, and potential tragedy. For them, and for every other Covid-19 vaccine recipient in the world.

If the GenScript SVNT results actually make the anti-syncytin-1 binding observations more, not less, surprising (and, if not more ominous, then at best differently ominous), why does the interpretation construed by the authors in Figure 2 amount to redoubled dismissal?

All that is meaningfully established by the GenScript SVNT tests in the Singapore study is that the vaccine seroconversion timeline proceeds entirely as expected; these results in no way directly contribute to the brief of “Addressing anti-syncytin antibody levels, and fertility and breastfeeding concerns,” as defined by the title of the study. So, why construct this confusing, side-by-side presentation of two incomparable sets of values - why so strongly imply that they are equally germane to the question the study was seeking to answer? It could merely be an innocent, rushed graphical design decision, or a default setting on their templates, with an unintentionally confusing result - or it could be intentionally confusing.

Were this and all the other bizarre choices made by the authors, in other words, “significant,” or accidental? We can only speculate. But we must be careful not to take too far the imagination, offered earlier, that the authors buried their confession of the lack of a knowable Optical Density standard for clinical significance halfway into their technocratic ELISA recipe for the purposes of hiding this confession. After all, there was no absolute need for the authors to include the statement in the study at all.

Despite the heavy-handed and transparent misrepresentations appearing elsewhere in the study, which are professionally embarrassing, this statement, and its placement, could instead be interpreted as being for the benefit of, and seeking to make the authors look competent in the eye of, fellow researchers who have experience with ELISA. Some applications of ELISA, we have learned, have standard procedures calibrated to understood statistical thresholds of significance; some can be calibrated arbitrarily to maximize sensitivity, in which case the clinical significance of the resulting measurement cannot be known. The authors, we could suppose, are merely showing their work: this ELISA was calibrated arbitrarily for the purposes of maximum difference-sensitivity, and the industry recommendation was one of the elements used to determine calibration - and why was it reasonable to do this? Because “there is no data on clinically-significant thresholds of anti-syncytin-1 antibodies.” Except, as we’ve already discussed, the positive control was not determinative. It was a quality-control benchmark to flag any results that would have needed to be thrown out and re-tested. It didn’t need to be mentioned in the study at all, any more than an offhand remark that “Kyle did not get ketchup on the plates this time.” Thus, although it is offered as the explanation for one of the most misleading choices the authors made, the confession reads more like a smuggled warning to the world.18

Additionally, this is not the only moment when the authors seem to walk back the absolutism of their assertions that their results were not “significant,” and gesture toward something like alarm and dismay over the implications of their work. Perhaps the nagging awareness of everything that is encompassed in the reproductive toxicity assessments applied by the FDA to most drugs, but not to these mRNA vaccines - a.k.a. having a baby, that doesn’t die - perhaps this was too awful for the authors to suppress completely.

This second instance of epistemic humility, in fact, appears in the Discussion section; the authors choose it as their closing remark:

Very well. Let’s discuss.

The belle of the ball: Mattar, C. et al. Addressing anti-syncytin antibody levels, and fertility and breastfeeding concerns, following BNT162B2 COVID-19 mRNA vaccination

Singapore initiated mass vaccination in mid-December with the Pfizer Covid-19 Vaccine, including immediately available vaccinations for healthcare workers. Reports of healthcare worker vaccine hesitancy, including specific fears regarding fertility implications, may explain why the subjects recruited for this study skew older. From Coronavirus: could Singapore’s vaccine drive become a victim of the city’s own success? (2021, January 20). South China Morning Post:

“Local media reports have suggested that vaccine hesitancy exists even among high-risk groups such as health care workers and frontline staff. “Joanne”, a nurse at a public hospital, told This Week in Asia she was not “superbly keen” on the vaccine, partly because she was trying for a baby but also because she felt she could afford to “observe” how things went. (…)

[News reports of the efficacy against SARS-CoV-2 were] what nudged Daphne Loo, 37, who works as a Covid-19 swabber for the government, into action. She initially had concerns about the vaccine but had a rethink after researching the science behind it. When she was offered the jab two weeks ago, she took her chance. “It wasn’t a hard decision to make,” she said. “I chose to get vaccinated because I truly believe that the only way we can be rid of this virus is to have as many people vaccinated as possible.”

See Hibi, Y. et al. High prevalence of the antibody against Syncytin-1 in schizophrenia, or just google “syncytin-1 schizophrenia.”

There is no data on clinically-significant thresholds of anti-syncytin-1 antibodies!

It is called “blocking ELISA detection.” A very readable description of the process appears in the user protocol manual for the Genscript SVNT test (see PDF, p. 2, “III. ASSAY PRINCIPLE”).

In the case of this study, the “antigen” is the syncytin-1 protein, which, once again, is critical to cell-binding during placental formation and appears important for neurological health.

Huzzah! We won’t let those high-falutin “Scientists” intimidate us with their calculations and measurements and what not! I jest, of course. Be very afraid.

ibid., Summary.

See, again, footnote 7. Blocking ELISA detection is elegant in concept, in that the antibody (or sample being qualitatively tested for antibody) is given a chance to bind to the antigen, and then the antigen’s target protein (the ACE2 receptor, in this case) is exposed to the mixture of both. Rather than trying to see if the scissors can cut the guitar strings, it asks the audience if they just heard Wonderwall. In practice, both methodologies must necessarily be calibrated according to real-world clinical and statistical data in order to end up with a proxy for functional immunity; however, blocking ELISA detection might provide stronger, more stable provisional validity according to my own view of the concept.

Observations of seroconversion - the arrival of detectable free IgM and IgG antibodies in the blood - can provide such noisy signals with SARS-CoV-2 and many other viruses, that they challenge our current account of the entire immune learning process; but the signals never begin within the first week of an infection. See Bauer, G. The variability of the serological response to SARS corona virus‐2: Potential resolution of ambiguity through determination of avidity (functional affinity).

See the testimony of Professor Byram Bridle, “MP Derek Sloan raises concerns about censorship of doctors and scientists,” press conference, (2021, June 17). See also the interview of Professor Sucharit Bhakdi with Journeyman Pictures, (2021, April 16). His view, which is shared by others, of where we can expect the Pfizer and Moderna mRNA package to go - namely that it will be trapped in the bloodstream, and must be taken up by vascular endothelial cells, so that vessels throughout the body will be littered with s-proteins that activate platelets/clotting, and byproducts that promote immune response/inflammation - begins at the 15 minute mark. Don’t worry, though. Blood vessels are not located in any of the really important parts of the body.

There is no data on clinically-significant thresholds of anti-syncytin-1 antibodies!

Of course, even if the positive control was not relevant to the overall context of the authors’ ELISA calibration, that does not mean that that same overall context was not informed by the lack of an established clinical standard for anti-syncytin-1 response, or that the authors did not have some justification for explaining their calibration in terms of the lack of the standard. The clause “There is no data…” could have been attached to almost any part of the paragraph. Thus it remains possible that the authors chose to place it in the middle to “bury” it, and the misleading description of their “positive control” just happened to be within the bullseye. It is no less possible that the “There is no data…” clause was “buried” first, before the positive control description was added, and later the authors selected it as the most attractive candidate when seeking to craft a “causative” account for their misleading presentation of the positive control. Whenever an act is committed with multiple, and evolving intentions, including the intention of signaling intention, one can afterward mind-read the actors’ intentions in a million ways. I attempted at first to favor a construction that presumed the authors’ intention-signaling rubric prioritized how the study would look to other researchers, talked myself out of it, and, like the authors, failed to resolve my own inconsistent intentions within the paragraph.

Seems to me you know what you're talking about. But you're too much in love with writing and English and too unrestrained to get your message across to us.

The discourse appears to be about three things:

The people who did the test and how they did it

The author himself

The results of the test.

And in the finish I don't know the unequivocal scientific message as pertains to the result.

Which is actually the only thing I'm concerned about.

So I seize upon this:

" as regards the biggest question of all, the study could never have possibly told us anything"

and use that as the take home message: the test is meaningless.

Right?

So, probably couldn't pass a quiz on the science here but definitely appreciate the 'laugh out loud' humor.