The definitive American childhood mortality reduction graph

An improvement to the previous post in the childhood mortality series.

Two-thirds of the way into my series designed to “improve” on the approach of “look graphs: disease deaths go down without vaccines,” I was gripped with a sudden and obsessive need to create my own, definitive version of

“Look graphs: disease deaths go down without vaccines.”

First, here is the yearly graph. There are of course important notes on design and method which will be provided below.

The reader can surely tell at a glance which diseases were alleviated thanks to vaccines and antibiotics.

This is sarcasm. There are few obvious deviations from the overall trend, and only one which plausibly relates to historical medical developments (diphtheria, with antitoxin treatment arguably accounting for the exceptionally steep and sustained decline from 1900 - 1910).

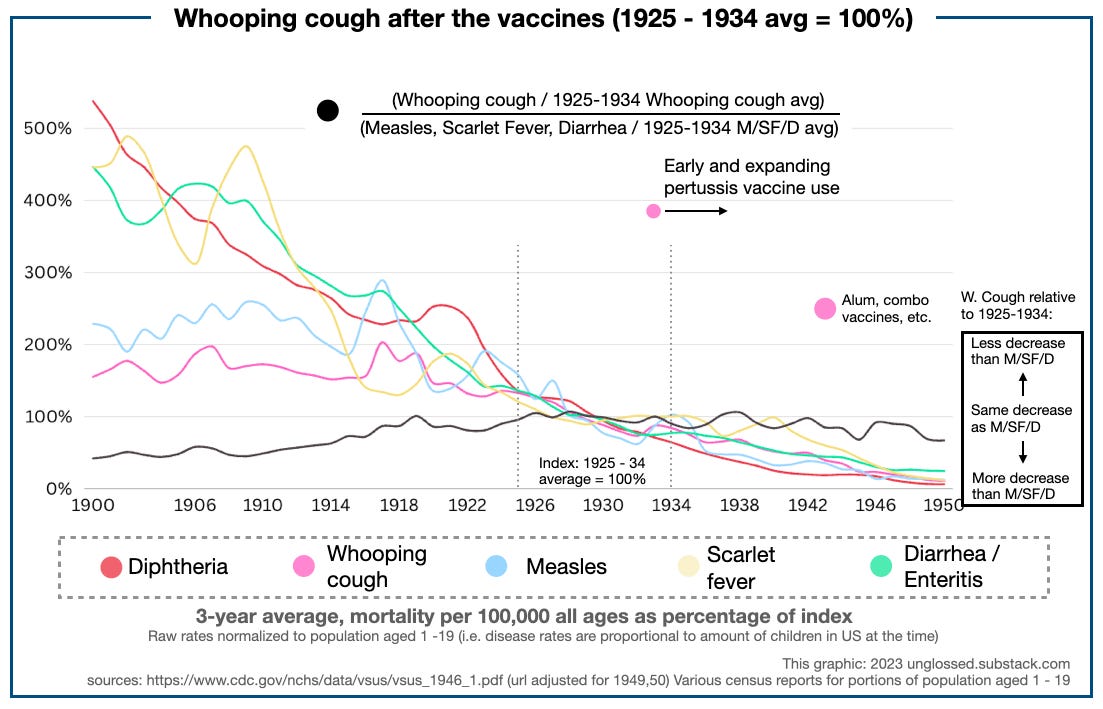

Here is the smoothed, 3-year average view, along with notes on relevant vaccines and antibiotics:

No vaccine, I feel, can credibly account for the declines of any disease. While diphtheria and whooping cough do experience inflections after the development of their respective vaccines, these inflections do not deviate from more long-standing trends (diphtheria since 1906; whooping cough since 1920); instead they are corrections to recent elevated rates. Said elevated rates for whooping cough, in fact, occur while the vaccine is in limited but not insubstantial use in certain municipalities (1933 - 1938, when Sauer’s vaccine is being used in Chicago and elsewhere). Only with diphtheria can a very generous case be made for hypothetical reductions. This can be done by changing the “100%” index to a different decade, another useful feature of this method.
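For anyone rebuilding the smoothed view from raw yearly rates, a 3-year average might be computed along the following lines. This is only a sketch: it assumes a centered window (each year averaged with its two neighbors, with the first and last years averaging whatever is available), which may not match how the smoothed graph was actually built. The function name and the sample rates are made up for illustration.

```python
def three_year_average(rates):
    """Centered 3-year moving average over a list of yearly rates.

    Assumption: the first and last years are averaged over the two
    years available rather than being dropped.
    """
    smoothed = []
    for i in range(len(rates)):
        window = rates[max(0, i - 1): i + 2]  # previous, current, next year
        smoothed.append(sum(window) / len(window))
    return smoothed


# Hypothetical yearly rates, NOT taken from the vital statistics reports.
example_rates = [40.0, 46.0, 34.0, 30.0, 38.0]
smoothed_example = three_year_average(example_rates)
print(smoothed_example)
```

A trailing window (the current year plus the two before it) would be an equally defensible choice; it would shift every inflection one year later.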

What reduced mortality?

These are not the first “look graphs: deaths go down without vaccines” graphs ever made. They are merely my own. I believe the use of 100% indexes for each disease offers an improvement to previous approaches, however — this reveals a great, overarching symmetry among diseases that had very different scales of per-capita mortality. Adjusting the “index” decade to 1911 - 1920, this symmetry becomes even more apparent:

For the sake of making this a more complete stand-alone post, I will include comments on the drivers of these declines which many readers will already be familiar with. These mortality reductions reflect not advances in medicine (either no relevant advances occurred for the diseases in question, or the relevant advances were not substantially employed[1]) but rather advances in urban infrastructure and general standard of living.

Special comment is only required for the four diseases which had remarkable declines before 1919: Typhoid fever and enteritis were subdued thanks to improvements in food quality, especially of milk. Scarlet fever is believed to have “self-attenuated;” in other words, a tendency for periodic incidence of more virulent disease mysteriously ceased. Diphtheria’s decline is plausibly a reflection of antitoxin treatment (strain surveys from later years do not support the notion that the more severe strains disappeared from prevalence at any point from 1900 - 1940 or so; they may have done so afterward).

After 1919, all mortalities decline at a consistent rate of 2 to 3% per year from the 1911 - 1920 average. Overall childhood mortality declines consistently in kind. No relevant vaccines are involved besides the arguable case of diphtheria.

This occurs despite the Depression, WWII, and post-War recession — but still can generally be credited to improvements in standard-of-living, when one keeps in mind conditions in the first two decades associated with immigrant slums, child labor, etc.

Thus I would argue that the most substantial driver of this decline in America was the closing of the borders from 1917 - 1924, which deprived urban centers of the “oxygen” of childhood mortality, the slum. Reduced immigration also, of course, tempered the extraordinary skew of the age distribution at the turn of the century, when over 40% of American residents were under age 20.[2]

(As remarked below, this reduction is adjusted for throughout the timespan, so that mortality rates are proportional to the population aged 1-19.)

In Europe, urbanization also slows after 1914 as a result of reduced migration from rural areas, thus explaining parallel mortality reductions in those nations.[3]

Now for notes:

i. Narrative background

In great part, I was unhappy with the quality of my analysis, last post, regarding the evidence for diphtheria vaccine mortality reductions and pertussis vaccine non-mortality reductions. I also wanted to build some math for a prediction of how many deaths might have “been being spared” by the diphtheria vaccine in 1951 - 1953, so that my dismissal of all other vaccines (besides polio) would not be corrupted by unfair framing. In short I needed raw data, to ink and shade-in my vague, unsatisfactory sketch of the truth.

Prompted by this obsession, I kept casting about for earlier US vital statistics reports on the CDC domain, thereby discovering the 1946 report, which gives yearly all-age by-cause mortality rates from 1900. That publication was combined with figures reported in 1949 and 1950 to populate a spreadsheet with 51 total years of American mortality statistics for eight separate causes of death related to childhood infectious disease mortality.

This was used to make some graphs. The graphs were used to add improvements to the previous post. The previous post, as a result, is even more unfocused than it was originally. As such, I am giving the graphs their own separate home here.

Once I have hit publish on this stub, I will hopefully be able to wrap up the draft of my examination of mortality reductions from 1953 - 2003 a few hours afterward.

ii. Which diseases included

Diseases were selected on the simple basis of driving deaths in younger ages in individual vital statistics reports before 1951, and consistently showing mortality rates above 1 in the 1946 yearly review. This led to the inclusion of “diarrhea / enteritis,” which, as can be seen in the first graph, accounted for as much mortality as the next six diseases put together in 1900 - 1909. Newspaper accounts affirm that “enteritis” was often used to describe epidemic mortality (in humans and livestock) throughout the entire period in question.

Three[4] diseases meeting this qualification were left out:

Malaria is an important driver of overall and childhood deaths in most of the years sampled. I considered it to be uninteresting, since it is transmitted by mosquito, and mortality was eliminated virtually overnight by the Public Health Service DDT campaigns. It may be noted that there was some decline in mortality before the 1940s, but not much.

Another important choice related to the translation of all-ages trends to unspecified childhood mortality rates for diseases shared by children and adults alike (those in the grey rectangle).

Thus, (influenza and) pneumonia was even more relevant in terms of childhood mortality, often the top cause in ages 1 - 5 in the 1940s (17.4% of all deaths in, for example, 1949), but I have also left it out. Since pneumonia deaths typically peak in late age, I felt that the natural mortality trend in children could not be reflected by all-ages rates, as the portion of Americans surviving to (and dying in) old age increased substantially during the decades in question, leading to increased all-ages mortality for deaths of old age (cardiovascular and cancer). Additionally, influenza complicates the situation by driving extra deaths from 1918 - 1924 or so, which is to say, by H1N1 “entering the arena late.” Overall, pneumonia may be contrasted with tuberculosis, which is also “significant in childhood mortality, but primarily a killer of adults” but has a great affinity for sending younger adults to the grave (RIP Chopin).

Finally, polio was excluded because it shares none of the characteristics of my set of infectious diseases, or what one might call “general infectious diseases” — polio mortality did not and could not “decline naturally” between 1900 and 1950, because it emerged and increased in this same period, because it was not caused naturally.

Nor are medical developments irrelevant for polio — they both exacerbated and reduced mortality before 1954, and then eliminated it afterward. As such none of the conclusions one might draw from my “look graphs” graph regarding medicine and vaccines apply to polio, nor do the example of polio and the polio vaccines apply to diseases broadly. This is all further discussed in my ongoing “Explaining Polio” series.

iii. Methodology schematic

With these choices made, I took the following approach with the data:

iv. Normalizing for the portion of children

I did not want it to be the case that mortality declines in the true childhood diseases (the pink rectangle) were simply being driven by and reflecting the aging of the population (essentially, the opposite of the problem with pneumonia). To “hide” all the reductions that resulted from there simply being fewer children per-capita to experience childhood disease, the raw rates were normalized for the portion of the population aged 1 - 19 in census reports. Thus, the declines in diphtheria, whooping cough, measles, and scarlet fever can at all times be expected to translate consistently to relative true declines in deaths among children, since few adults died from these causes. Overall there was not much difference in the adjusted and unadjusted results anyway.
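A minimal sketch of this kind of adjustment follows. It assumes the normalization is a simple division of the all-ages rate by the share of the population aged 1 - 19, followed by indexing each year against the average of a chosen base period (the “100%” index). The function names, rates, and population shares are hypothetical stand-ins, not figures from the vital statistics or census reports.

```python
def normalize_for_children(all_ages_rate, child_share):
    """Scale an all-ages rate by the share of the population aged 1 - 19.

    Assumption: plain division, so a shrinking share of children does not
    by itself register as a decline in mortality.
    """
    return all_ages_rate / child_share


def index_to_base(rates_by_year, base_years):
    """Express every year as a percentage of the base-period average."""
    base = sum(rates_by_year[y] for y in base_years) / len(base_years)
    return {year: 100.0 * rate / base for year, rate in rates_by_year.items()}


# Hypothetical all-ages rates and child population shares.
raw_rates = {1900: 40.0, 1901: 36.0, 1902: 44.0, 1950: 1.6}
child_shares = {1900: 0.40, 1901: 0.40, 1902: 0.40, 1950: 0.32}

adjusted = {
    year: normalize_for_children(raw_rates[year], child_shares[year])
    for year in raw_rates
}
indexed = index_to_base(adjusted, base_years=[1900, 1901, 1902])
print(indexed)
```

Choosing a different index period only requires passing different `base_years`; the adjusted rates themselves do not change.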

v. Changing the index year for other insights

A second advantage of plotting all diseases in percentage terms is that a different index period can be chosen for any / all diseases. This allowed for examining the potential influence of the diphtheria and pertussis vaccines in finer detail. Just for an example of what is possible, I chose 1925 - 1934 as the index decade for the pertussis vaccine, as experimental use takes place after 1932. Even if widespread use does not begin for another decade, limited use ought to show some influence on overall childhood mortality. To look for this “influence,” the plotted percentage rate of whooping cough can simply be divided by the average percentage rates of measles, scarlet fever, and enteritis:

Bearing in mind that whooping cough mainly kills in the first year of life, this raises interesting questions: Is the problem simply that vaccinating in infancy is pragmatically futile? Or was there perhaps an enhancing effect in older siblings of at-risk infants, as later observed with the acellular vaccine? Either way, it is clear that whooping cough declines did not outpace the general trend, and underperformed declines in measles — which had no vaccine (though there was some use of serum treatment in this era).
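The division described in this section can be sketched as follows, assuming each disease has already been expressed as a percentage of its own index-period average. The function name and the index values are hypothetical, chosen only to show the arithmetic. A result above 1 means whooping cough has declined less than the comparison diseases; a result below 1 means it has declined more.

```python
def relative_index(target, comparators):
    """Divide the target's index by the mean comparator index, year by year."""
    result = {}
    for year, value in target.items():
        mean_comparator = sum(c[year] for c in comparators) / len(comparators)
        result[year] = value / mean_comparator
    return result


# Hypothetical index values (percent of each disease's own base-period average).
whooping_cough = {1935: 80.0, 1940: 60.0}
measles = {1935: 70.0, 1940: 30.0}
scarlet_fever = {1935: 90.0, 1940: 50.0}
enteritis = {1935: 80.0, 1940: 40.0}

ratio = relative_index(whooping_cough, [measles, scarlet_fever, enteritis])
print(ratio)
```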

Since I have made the raw data available (google sheets), to save the reader having to do their own rebuild of the vital statistics pdfs, perhaps there are other creative uses to be found.


[1] Save, again, for diphtheria.

[2] I used table two in the 1940 census summary for all decades except 1950 - https://www2.census.gov/library/publications/decennial/1940/population-volume-4/33973538v4p1ch1.pdf

[4] The original version of this post neglected to explain the exclusion of polio.
