Follow-up to "The Danger of AI"

Shumailov, et al. (a weeks-old paper) confirms that LLM output will poison future LLM input.

Commenter “Orlando” brought to light pre-existing discussion of the problem I termed “Iterative LLM-inaccuracy” in yesterday’s post. As I said, it is an obvious problem, so it is not surprising that AI insiders are aware of it.

Yesterday’s post:

And the article flagged by Orlando:

The VentureBeat article was prompted by a paper that AI researchers uploaded at the end of last month.

Also mentioned, I would note, is a tangential reference to the problem by sci-fi author Ted Chiang, in a New Yorker essay from February. Chiang’s point, however, is rather that LLMs are not high-fidelity enough to be relied on for information as-is, and that the presumed future desire to exclude LLM-generated content from LLM training can be taken as evidence of a lack of confidence in the product:

There is very little information available about OpenAI’s forthcoming successor to ChatGPT, GPT-4. But I’m going to make a prediction: when assembling the vast amount of text used to train GPT-4, the people at OpenAI will have made every effort to exclude material generated by ChatGPT or any other large language model. If this turns out to be the case, it will serve as unintentional confirmation that the analogy between large language models and lossy compression is useful. Repeatedly resaving a jpeg creates more compression artifacts, because more information is lost every time. It’s the digital equivalent of repeatedly making photocopies of photocopies in the old days. The image quality only gets worse.

Chiang isn’t particularly considering, or at least not highlighting, the predicament that will arise when OpenAI actually has to attempt such an exclusion: the web is already flooded with new ChatGPT-produced content that is vastly more difficult to distinguish from human writing than the bot-generated text in the September 2021 dataset was.

Therefore, it seems that I was pretty close to being able to claim “first” on the problem of fatal Iterative LLM-inaccuracy / “Model Collapse” highlighted in the paper that prompted the VentureBeat article. Ah, if only I had seen the gas pump infotainment video a few weeks earlier…

In this follow-up post, I’ll highlight some of the text from Shumailov, et al.; this post will act as an “appendix” to yesterday’s. The focus of yesterday’s post remains not that Model Collapse is a big deal in and of itself, but that LLMs will first seduce humans into letting specific, organic knowledge systems wither for some number of months or years (by displacing individual humans currently being paid just to know mundane things) before collapse substantially degrades information quality. By then, economic and political incentives may not allow a switch back to fully human knowledge distribution; we will simply be stuck, forever, with institutions that lack accurate and relevant knowledge.

I would also like to highlight (and probably inaccurately summarize) comments by Jon, who writes at Notebooks of an Inflamed Cynic, suggesting that LLM-driven information degradation (not via a successful invasion of business, but via open-source horseplay) could be a good thing, simply by accelerating the internet’s conversion into something entirely devoid of possible use.

So, well before AI enters a doom-loop of information degradation due to training on its own outputs, it will hit the performance wall due to its own natural limitations and the added sand in the gears of human administration and politics and having to have somebody looking over its shoulder all the time.

To me, this doesn't actually get interesting until the open source versions really start to make their presence known, and then not for the reasons of "techno-singularity" but just because the levels of trolling and shitposting possible at scale at that point may actually push the whole internet into a completely unusable limit cycle (seems like we're already a good part of the way there without any AI assistance).

This prompted my own proposal (down-thread) that perhaps economic incentives against returning to human information systems will prevail only at the corporate level, leaving corporations unable to compete with smaller businesses that can resist those incentives (admittedly a utopian hypothetical). Perhaps LLMs will so poison consolidated knowledge that localism is the only functional economic mode left.

Excerpts from Shumailov, et al.

Yet while current LLMs [Devlin et al., 2018, Liu et al., 2019, Brown et al., 2020, Zhang et al., 2022], including GPT-4, were trained on predominantly human-generated text, this may change in the future. If most future models’ training data is also scraped from the web, then they will inevitably come to train on data produced by their predecessors. In this paper, we investigate what happens when text produced, e.g. by a version of GPT, forms most of the training dataset of following models. What happens to GPT versions GPT-{n} as generation n increases?

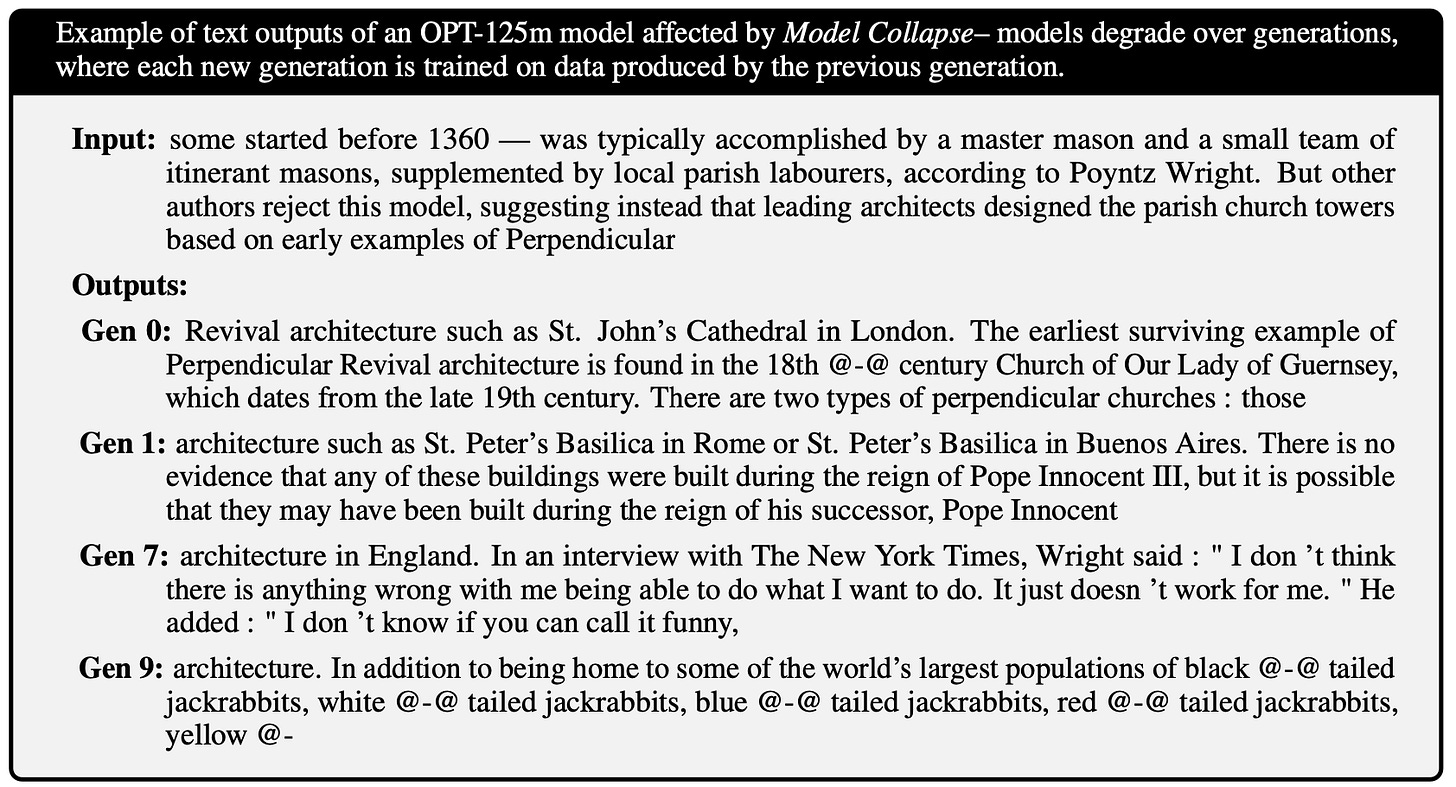

We discover that learning from data produced by other models causes model collapse – a degenerative process whereby, over time, models forget the true underlying data distribution, even in the absence of a shift in the distribution over time. […]

We show that over time we start losing information about the true distribution, which first starts with tails disappearing, and over the generations learned behaviours start converging to a point estimate with very small variance. Furthermore, we show that this process is inevitable, even for cases with almost ideal conditions for long-term learning i.e. no function estimation error. […]

We show that, to avoid model collapse, access to genuine human-generated content is essential.

Just as I described the problem of iterative inaccuracy as “inescapable” based on theory, the authors describe it as “inevitable” based on simulation and maths.
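
To see why finite resampling alone makes tails vanish, here is a toy simulation in the spirit of the paper’s argument. It is not the authors’ code; the Zipf-like starting weights, the sample size, and the generation count are arbitrary assumptions chosen for illustration. Each generation “trains” by counting category frequencies in a finite sample drawn from the previous generation’s model. A rare category that happens to draw zero samples gets probability zero and can never return, so the number of surviving categories can only shrink.

```python
import random
from collections import Counter


def simulate_collapse(weights, sample_size=100, generations=200, seed=0):
    """Toy model-collapse loop over a discrete distribution (illustrative only).

    Generation 0 is the "human" distribution given by `weights`. Each later
    generation is fitted, by simple frequency counting, to `sample_size`
    draws from the previous generation. Returns the number of categories
    with nonzero probability after each generation.
    """
    rng = random.Random(seed)  # seeded so a run is reproducible
    categories = list(range(len(weights)))
    total = sum(weights)
    probs = [w / total for w in weights]
    surviving = [sum(p > 0 for p in probs)]
    for _ in range(generations):
        # "Publish" the current model's output, then "train" the next model on it.
        sample = rng.choices(categories, weights=probs, k=sample_size)
        counts = Counter(sample)
        probs = [counts[c] / sample_size for c in categories]
        surviving.append(sum(p > 0 for p in probs))
    return surviving


# Zipf-like "human" data: a few common categories and a long tail of rare ones.
human_weights = [1 / (rank + 1) for rank in range(20)]
history = simulate_collapse(human_weights)
print(history[0], history[10], history[-1])
```

The exact numbers depend on the seed, but the count never rises, and given enough generations it tends toward a single surviving category, which is the discrete analogue of the “point estimate with very small variance” in the quoted passage.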

Anyhow, what Shumailov, et al. find is that iterative model training tends to homogenize. A model trained on a human-generated library of knowledge over-samples common examples of any concept and under-samples rare ones; each successor trained on that output inherits and compounds the skew. Impressively, this problem infects image-based models as well:

However, it’s not obvious that users will need image-generating AIs to be trained on images from after 2022, or that it will be especially difficult to hand-curate which images are added to the original training library (e.g., to add a new celebrity whenever needed).

It is with LLMs, in my opinion, that the product will enter applications where future updates are critical to retain user value, but impossible without including substantial AI-generated texts of dubious accuracy.

Homogenize or Nonsense-ify?

Regarding homogenization: while Shumailov, et al. do demonstrate it in the LLM context in their simulations, this overlooks the fact that human applications of LLMs will seek to amplify unpredictable elements within the training data. One of the most prized features of ChatGPT and GPT-4 right now is their ability to generate creative answers to questions simple and complex; to answer the question, “What have I missed?”

If these are the types of replies being solicited from the LLM, then future training materials will over-sample rarer examples, as well as myriad erroneous answers invented to satisfy prompts (i.e. hallucinations) and nonetheless published uncritically onto the internet. My assumption, in fact, is that this will be the most immediate mutagenic effect of LLM self-training. Shumailov, et al. also seemingly acknowledge it as a possibility:

Each of the above can cause model collapse to get worse or better. Better approximation power can even be a double-edged sword – better expressiveness may counteract statistical noise, resulting in a good approximation of the true distribution, but it can equally compound this noise. More often then not, we get a cascading effect where combined individual inaccuracy causes the overall error to grow. Overfitting the density model will cause the model to extrapolate incorrectly and might give high density to low-density regions not covered in the training set support; these will then be sampled with arbitrary frequency.

Indeed, what their example simulation of iterative training eventually produces is half-garbled nonsense, as tiny inaccuracies compound out of control:

Possible Countermeasures?

Bleakly, they write:

To make sure that learning is sustained over a long time period, one needs to make sure that access to the original data source is preserved and that additional data not generated by LLMs remain available over time. The need to distinguish data generated by LLMs from other data raises questions around the provenance of content that is crawled from the Internet: it is unclear how content generated by LLMs can be tracked at scale.

One option is community-wide coordination to ensure that different parties involved in LLM creation and deployment share the information needed to resolve questions of provenance. Otherwise, it may become increasingly difficult to train newer versions of LLMs without access to data that was crawled from the Internet prior to the mass adoption of the technology, or direct access to data generated by humans at scale.

If “access to genuine human-generated content is essential” to avoid Model Collapse, it is by extension essential to be able to sustain production of human-generated content and to be able to identify it. But LLMs are poised to make both conditions impossible.

All that Shumailov, et al. can offer in answer to this paradox is a platitude in favor of collaboration, and a vague proposal for direct access to human-generated data (which would be possible in fields like medicine and sports, as mentioned in yesterday’s post, but will do little to keep knowledge systems functional in more complex economic and social spheres).

If you derived value from this post, please drop a few coins in your fact-barista’s tip jar.

The feedback loop, a.k.a. too much recursion. I have a great graphic to illustrate this; maybe I can manage to post it in Notes...yep! https://substack.com/profile/4958635-tardigrade/note/c-17550793

I agree with this and your own theorizing wholeheartedly. In fact, I think people take for granted just how much non-AI, human-originated data and feedback is needed to make AI / ML function well in the first place. For example, very few people outside the AI / ML space know that if you are building something from scratch, without a pre-trained model, or training on a new category of data, you actually need humans to label that data. Likewise, almost no one knows this fact: https://time.com/6247678/openai-chatgpt-kenya-workers/

"Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic"

"The premise was simple: feed an AI with labeled examples of violence, hate speech, and sexual abuse, and that tool could learn to detect those forms of toxicity in the wild ...

To get those labels, OpenAI sent tens of thousands of snippets of text to an outsourcing firm in Kenya, beginning in November 2021. Much of that text appeared to have been pulled from the darkest recesses of the internet ...

The data labelers employed by Sama on behalf of OpenAI were paid a take-home wage of between around $1.32 and $2 per hour depending on seniority and performance ..."

(the company, Sama, hired 50,000 workers)

So aside from the raw human-supplied data, it's not just the manual labeling and categorization work that's needed; it's also the weights (the learned values of the model's billions of parameters) that a model needs in order to work, and that shape its output. The exact same AI / ML model with any of those being different will produce different output. And there's no programmatic model to "know" any of those in the first place (i.e. no epistemological algorithm). Since the model is considered the "core" of whatever AI tech one is referring to, an analogy would be a person who completely, and genuinely, changes personality depending on the clothes he wears, giving different responses to the same question depending on that clothing, all unbeknownst to that very person.

There's a reason Human-in-the-Loop modeling exists: humans need to judge the data and output and provide feedback to prevent model breakdown. See: https://research.aimultiple.com/human-in-the-loop/

I made the same comments about model breakdown elsewhere, in a tech community, using the same image-generation example over a month ago. I had posited that a "degeneration" would occur if you started training AI / ML on AI output in a closed feedback loop:

What I mean by degeneration is, for example, that AI models would initially know what a real cat looks like. But then limit their dataset to ONLY generative AI output, and they would start generating fictitious cats. Again, given this is a closed system, only those images would then be used to recognize what a cat is. As the number of fictitiously generated cat images grows without humans in the loop, with all the models bouncing training input and generative output back and forth between each other, they would no longer be able to recognize or generate a real cat.

Here's one example of model breakdown, caused by missing categorization and sample data, when AI image generators are used for style transfer, i.e. transforming a real-world photo into an art style such as oil painting or, in this case, anime/manga style:

https://www.reddit.com/r/lostpause/comments/zbju2i/i_thought_the_new_ai_painting_art_changes_people/

It really highlights how model programming and training data (both of which, again, are human-created) influence outcomes, including limitations that may not have been considered.

The limbless man above is Nick Vujicic, and the broken result of prompting the model to transform his photo into an art form (a process involving attribute transfer) is almost certainly because the closest match in its training data was the blue suitcase. Even though all of the broken-down attributes likely had very low weights, that was still the only suitable match. If its training data had included handicapped anime characters, especially limbless anime characters, the inference would have picked up on those instead.

Human artists wouldn't make this mistake; they would be able to draw Nick Vujicic in anime/manga style even WITHOUT ever having seen a limbless anime character before.

Now imagine there were no corrective feedback and no human intervention, and the same AI model continued to train on AI output. That would just further reinforce cases where handicapped people and/or people with missing limbs get incorrectly seen as inanimate objects instead of humans.

This is exactly the same problem Tesla had many years ago, when researchers found stupid-simple ways to fool its self-driving. One example was taking a 35 mph speed limit sign and applying black tape down the left side of the "3" so that, to human eyes, it looks like a "B", i.e. "B5". After semantic segmentation (a process that breaks parts of the image/video down into meaningful categories, i.e. this is a human, this is the road, this is a sign), the system recognizes the sign as a speed limit sign, and then OCR reads it, but the OCR is limited to just numbers because, after all, speed limit signs are supposed to be composed of just numbers.

So the closest match for the hacked sign was not "hey, that 3 has obviously been tampered with to look like a B", but rather an "8", which resulted in the car speeding up to 85 mph in the 35 mph zone. If you know how the modeling works, the neural network is fixed so that certain inputs travel along a certain path through its layers, and there is simply no handling or recognition of outliers. Once its image recognition network reaches the speed sign, at those layers it's trained only to recognize numbers: there's no other choice, and there was no path (at that time, at least) that said "hey, something's not right." Another similar trick to fool the self-driving AI used lasers and holographic projection.
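
To make the closed-set point concrete, here is a toy sketch. It is not how Tesla's or any real sign reader works (those use trained neural networks, not template matching); the 3×5 bitmap glyphs, the Hamming-distance nearest-template matcher, and the `max_distance` reject threshold are all hypothetical. A reader whose only labels are digits must return some digit, even for a glyph that looks more like a "B"; a reject threshold is one simple way to give it a "something's not right" path.

```python
# Toy closed-set "speed sign digit reader" (hypothetical, for illustration only).
# Glyphs are 3-wide x 5-tall bitmaps written as five 3-character rows.
DIGITS = {
    "0": ["111", "101", "101", "101", "111"],
    "1": ["010", "110", "010", "010", "111"],
    "2": ["111", "001", "111", "100", "111"],
    "3": ["111", "001", "111", "001", "111"],
    "4": ["101", "101", "111", "001", "001"],
    "5": ["111", "100", "111", "001", "111"],
    "6": ["111", "100", "111", "101", "111"],
    "7": ["111", "001", "001", "001", "001"],
    "8": ["111", "101", "111", "101", "111"],
    "9": ["111", "101", "111", "001", "111"],
}


def hamming(a, b):
    """Count the pixels that differ between two glyphs."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def read_digit(glyph, max_distance=None):
    """Return the nearest digit template for `glyph`.

    With max_distance=None the reader is strictly closed-set: it always
    answers with some digit. With a threshold, a glyph farther than
    max_distance from every template is rejected (returns None).
    """
    best = min(DIGITS, key=lambda d: hamming(glyph, DIGITS[d]))
    if max_distance is not None and hamming(glyph, DIGITS[best]) > max_distance:
        return None
    return best


# A "B"-like glyph: roughly what a human sees after tape is added to a "3".
B_LIKE = ["110", "101", "110", "101", "110"]

print(read_digit(B_LIKE))                  # closed-set: prints 8
print(read_digit(B_LIKE, max_distance=1))  # with a reject option: prints None
```

In this toy, the "B" is three pixels away from the "8" template and farther from every other digit, so the closed-set reader confidently answers "8"; only the added reject option lets it decline to answer.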